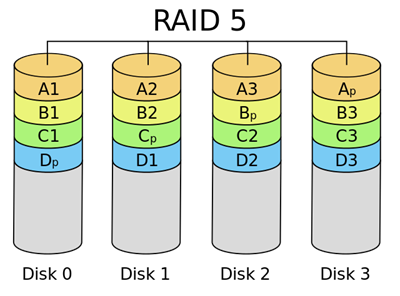

Parity protection is a common technique for reliable data storage on mediums that may fail (HDDs, SSDs, storage servers, etc.) Calculation of parity uses tools from algebra in very interesting ways, in particular in the dual parity case.

This article is for computer engineers who would like to have a high-level understanding of how the dual parity calculation works, without diving into all of the mathematical details.

Single parity

Let’s start with the simple case – single parity. As an example, say that you need to reliably store 5 data blocks of a fixed size (e.g., 4kB). You can put the 5 data blocks on 5 different storage devices, and then store a parity block on a 6th device. If any one device fails, you can still recompute the block from the failed device, and so you haven’t lost any data.

A simple way to calculate the parity P is just to combine the values a, b, c, d, e with the exclusive-or (XOR) operator:

P = a XOR b XOR c XOR d XOR e

Effectively, every parity bit protects the corresponding bits of a, b, c, d, e.

- If the first parity bit is 0, you know that the number of 1 bits among the first bits of a, b, c, d, e is even.

- Otherwise the number of 1 bits is odd.

Given a, b, c, e and P, it is easy to reconstruct the missing block d:

d = P XOR a XOR b XOR c XOR e

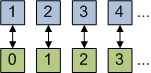

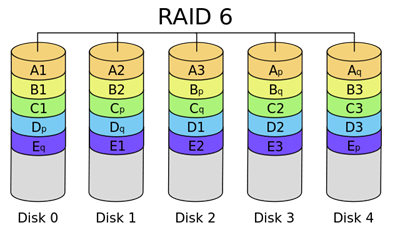

Straightforward so far. Exclusive-or parity is commonly used in storage systems as RAID-5 configuration:

RAID-5 uses the exclusive-or parity approach, except that the placement of parity is rotated among the storage devices. Parity blocks gets more overwrites than data blocks, so it makes sense to distribute them among the devices.

What about double parity?

Single parity protects data against the loss of a single storage device. But, what if you want protection against the loss off two devices? Can we extend the parity idea to protect the data even in that scenario?

It turns out that we can. Consider defining P and Q like this:

P = a + b + c + d + e

Q = 1a + 2b + 3c + 4d + 5e

If you lose any two of { a, b, c, d, e, P, Q }, you can recover them by plugging in the known values into the equations above, and solving for the two unknowns. Simple.

For example, say that { a, b, c, d, e } are { 10, 5, 8, 13, 2 }. Then:

P = 10 + 5 + 8 + 13 + 2 = 38

Q = 10 + 2 × 5 + 3 × 8 + 4 × 13 + 5 × 2 = 106

If you lost c and d, you can recover them by solving the original equations.

How come you can always solve the equations?

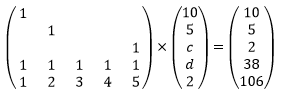

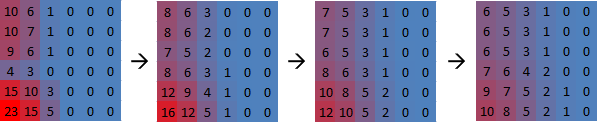

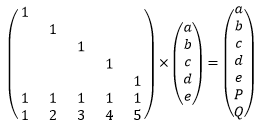

To explain why we can always solve the equations to recover two missing blocks, we need to take a short detour into matrix algebra. We can express the relationship between a, b, c, d, e, P, Q as the following matrix-vector multiplication:

We have 7 linear equations of 5 variables. In our example, we lose blocks c and d and end up with 5 equations of 5 variables. Effectively, we lost two rows in the matrix and the two matching rows in the result vector:

This system of equations is solvable because the matrix is invertible: the five rows are all linearly independent.

In fact, if you look at the original matrix, any five rows would have been linearly independent, so we could solve the equations regardless of which two devices were missing.

But, integers are inconvenient…

Calculating P and Q from the formulas above will allow you to recover up to two lost data blocks.

But, there is an annoying technical problem: the calculated values of P and Q can be significantly larger than the original data values. For example, if the data block is 1 byte, its value ranges from 0 to 255. But in that case, the Q value can reach 3825! Ideally, you’d want P and Q to have the same range as the data values themselves. For example, if you are dealing with 1-byte blocks, it would be ideal if P and Q were 1 byte as well.

We’d like to redefine the arithmetic operators +, –, ×, / such that our equations still work, but the values of P and Q stay within the ranges of the input values.

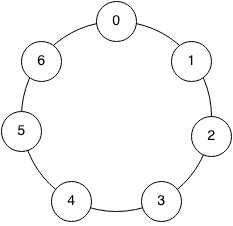

Thankfully, this is a well-studied problem in algebra. One simple approach that works is to pick a prime number M, and change the meaning of +, –, and × so that values "wrap around" at M. For example, if we picked M=7, then:

6 + 1 = 0 (mod 7)

4 + 5 = 2 (mod 7)

3 × 3 = 2 (mod 7)

etc.

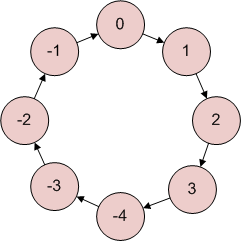

Here is how to visualize the field:

Since 7 is a prime number, this is an example of a finite field. Additions, subtractions, multiplications and even divisions work in a finite field, and so our parity equations will work even modulo 7. In some sense, finite fields are more convenient than integers: dividing two integers does not necessarily give you an integer (e.g., 5 / 2), but in a finite field, division of two values always gives you another value in the field (e.g., 5 / 2 = 6 mod 7). Division modulo a prime is not trivial to calculate – you need to use the extended Euclidean algorithm – but it is pretty fast.

But, modular arithmetic forms a finite field only if the modulus is prime. If the modulus is composite, we have a finite ring, but not a finite field, which is not good enough for our equations to work.

Furthermore, in computing we like to deal with bytes or multiples of bytes. And, the range of a byte is 256, which is definitely not a prime number. 257 is a prime number, but that still doesn’t really work for us… if the inputs are bytes, our P and Q values will sometimes come out as 256, which doesn’t fit into a byte.

Finally, Galois Fields

The finite fields we described so far are all of size M, where M is a prime number. It is possible to construct finite fields of size Mk, where M is a prime number and k is a positive integer.

The solution is called a Galois field. The particular Galois field of interest is GF(28) where:

- Addition is XOR.

- Subtraction is same as addition; also XOR.

- Multiplication can be implemented as gf_ilog[gf_log[a] + gf_log[b]], where gf_log is a logarithm in the Galois field, and gf_ilog is its inverse. gf_log and gf_ilog can be tables computed ahead of time.

- Division can then calculated as gf_ilog[gf_log[a] – gf_log[b]], so we can reuse the same tables used by multiplication.

The actual calculation of the logarithm and inverse logarithm tables is beyond the scope of the article, but if you would like to learn more about the details ,there are plenty of in-depth articles on Galois fields online.

In the end, a GF(28) field gives us exactly what we wanted. It is a field of size 28, and it gives us efficient +, –, ×, / operators that we can use to calculate P and Q, and if needed recalculate a lost data block from the remaining data blocks and parities. And, each of P and Q perfectly fits into a byte. The resulting coding is called Reed-Solomon error correction, and that’s the method used by RAID 6 configuration:

Final Comments

The article explains the overall approach to implementing a RAID-6 storage scheme based on GF(28). It is possible to use other Galois fields, such as the 16-bit GF(216), but the 8-bit field is most often used in practice.

Also, it is possible to calculate additional parities to protect against additional device failures:

R = 12a + 22B + 32C + 42D + 52E

S = 13a + 23B + 33C + 43D + 53E

…

Finally, the examples in this article used 5 data blocks per stripe, but that’s an arbitrary choice – any number works, as far as the parity calculation is concerned.

Related Reading

The mathematics of RAID-6, H. Peter Anvin.

Do you know whether this inequality is true?

![]()

It’s a simple question, but with an intriguing and revealing answer.

Infinity #1

One concept of infinity that most people would have encountered in a math class is the infinity of limits. With limits, we can try to understand 2∞ as follows:

![]()

The infinity symbol is used twice here: first time to represent “as x grows”, and a second to time to represent “2x eventually permanently exceeds any specific bound”.

If we use the notation a bit loosely, we could “simplify” the limit above as follows:

![]()

This would suggest that the answer to the question in the title is “No”, but as will be apparent shortly, using infinity notation loosely is not a good idea.

Infinity #2

In addition to limits, there is another place in mathematics where infinity is important: in set theory.

Set theory recognizes infinities of multiple “sizes”, the smallest of which is the set of positive integers: { 1, 2, 3, … }. A set whose size is equal to the size of positive integer set is called countably infinite.

- “Countable infinity plus one”

If we add another element (say 0) to the set of positive integers, is the new set any larger? To see that it cannot be larger, you can look at the problem differently: in set { 0, 1, 2, … } each element is simply smaller by one, compared to the set { 1, 2, 3, … }. So, even though we added an element to the infinite set, we really just “relabeled” the elements by decrementing every value.

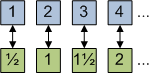

- “Two times countable infinity”

Now, let’s “double” the set of positive integers by adding values 0.5, 1.5, 2.5, … The new set might seem larger, since it contains an infinite number of new values. But again, you can say that the sets are the same size, just each element is half the size:

- “Countable infinity squared”

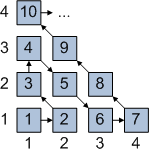

To “square” countable infinity, we can form a set that will contain all integer pairs, such as [1,1], [1,2], [2,2] and so on. By pairing up every integer with every integer, we are effectively squaring the size of the integer set.Can pairs of integers also be basically just relabeled with integers? Yes, they can, and so the set of integer pairs is no larger than the set of integers. The diagram below shows how integer pairs can be “relabeled” with ordinary integers (e.g., pair [2,2] is labeled as 5):

- “Two to the power of countable infinity”

The set of integers contains a countable infinity of elements, and so the set of all integer subsets should – loosely speaking – contain two to the power of countable infinity elements. So, is the number of integer subsets equal to the number of integers? It turns out that the “relabeling” trick we used in the first three examples does not work here, and so it appears that there are more integer subsets than there are integers.

Let’s look at the fourth example in more detail to understand why it is so fundamentally different from the first three. You can think of an integer subset as a binary number with an infinite sequence of digits: i-th digit is 1 if i is included in the subset and 0 if i is excluded. So, a typical integer subset is a sequence of ones and zeros going forever and ever, with no pattern emerging.

And now we are getting to the key difference. Every integer, half-integer, or integer pair can be described using a finite number of bits. That’s why we can squint at the set of integer pairs and see that it really is just a set of integers. Each integer pair can be easily converted to an integer and back.

However, an integer subset is an infinite sequence of bits. It is impossible to describe a general scheme for converting an infinite sequence of bits into a finite sequence without information loss. That is why it is impossible to squint at the set of integer subsets and argue that it really is just a set of integers.

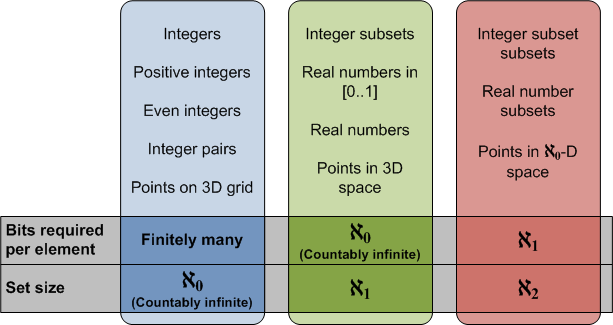

The diagram below shows examples of infinite sets of three different sizes:

So, in set theory, there are multiple infinities. The smallest infinity is the “countable” infinity, ![]() 0, that matches the number of integers. A larger infinity is

0, that matches the number of integers. A larger infinity is ![]() 1 that matches the number of real numbers or integer subsets. And there are even larger and larger infinite sets.

1 that matches the number of real numbers or integer subsets. And there are even larger and larger infinite sets.

Since there are more integer subsets than there are integers, it should not be surprising that the mathematical formula below holds (you can find the formula in the Wikipedia article on Continuum Hypothesis):

![]()

And since ![]() 0 denotes infinity (the smallest kind), it seems that it would not be much of a stretch to write this:

0 denotes infinity (the smallest kind), it seems that it would not be much of a stretch to write this:

![]()

… and now it seems that the answer to the question from the title should be “Yes”.

The answer

So, is it true that that 2∞ > ∞? The answer depends on which notion of infinity we use. The infinity of limits has no size concept, and the formula would be false. The infinity of set theory does have a size concept and the formula would be kind of true.

Technically, statement 2∞ > ∞ is neither true nor false. Due to the ambiguous notation, it is impossible to tell which concept of infinity is being used, and consequently which rules apply.

Who cares?

OK… but why would anyone care that there are two different notions of infinity? It is easy to get the impression that the discussion is just an intellectual exercise with no practical implications.

On the contrary, rigorous understanding of the two kinds of infinity has been very important. After properly understanding the first kind of infinity, Isaac Newton was able to develop calculus, followed by the theory of gravity. And, the second kind of infinity was a pre-requisite for Alan Turing to define computability (see my article on Numbers that cannot be computed) and Kurt Gödel to prove Gödel’s Incompleteness Theorem.

So, understanding both kinds of infinity has lead to important insights and practical advancements.

If you had to come up with a way to represent signed integers in 32-bits, how would you do it?

One simple solution would be to use one bit to represent the sign, and the remaining 31 bits to represent the absolute value of the number. But as many intuitive solutions, this one is not very good. One problem is that adding and multiplying these integers would be somewhat tricky, because there are four cases to handle due to signs of the inputs. Another problem is that zero can be represented in two ways: as positive zero and as negative zero.

Computers use a more elegant representation of signed integers that is obviously “correct” as soon as you understand how it works.

Clock arithmetic

Before we get to the signed integer representation, we need to briefly talk about “clock arithmetic”.

Clock arithmetic is a bit different from ordinary arithmetic. On a 12-hour clock, moving 2 hours forward is equivalent to moving 14 hours forward or 10 hours backward:

![]() + 2 hours =

+ 2 hours = ![]()

![]() + 14 hours =

+ 14 hours = ![]()

![]() – 10 hours =

– 10 hours = ![]()

In the “clock arithmetic”, 2, 14 and –10 are just three different ways to write down the same number.

They are interchangeable in multiplications too:

![]() + (3 * 2) hours =

+ (3 * 2) hours = ![]()

![]() + (3 * 14) hours =

+ (3 * 14) hours = ![]()

![]() + (3 * -10) hours =

+ (3 * -10) hours = ![]()

A more formal term for “clock arithmetic” is “modular arithmetic”. In modular arithmetic, two numbers are equivalent if they leave the same non-negative remainder when divided by a particular number. Numbers 2, 14 and –10 all leave remainder of 2 when divided by 12, and so they are all “equivalent”. In math terminology, numbers 2, 14 and –10 are congruent modulo 12.

Fixed-width binary arithmetic

Internally, processors represent integers using a fixed number of bits: say 32 or 64. And, additions, subtractions and multiplications of these integers are based on modular arithmetic.

As a simplified example, let’s consider 3-bit integers, which can represent integers from 0 to 7. If you add or multiply two of these 3-bit numbers in fixed-width binary arithmetic, you’ll get the “modular arithmetic” answer:

1 + 2 –> 3

4 + 5 -> 1

The calculations wrap around, because any answer larger than 7 cannot be represented with 3 bits. The wrapped-around answer is still meaningful:

- The answer we got is congruent (i.e., equivalent) to the real answer, modulo 8

This is the modular arithmetic! The real answer was 9, but we got 1. And, both 9 and 1 leave remainder 1 when divided by 8. - The answer we got represents the lowest 3 bits of the correct answer

For 4+5, we got 001, while the correct answer is 1001.

One good way to think about this is to imagine the infinite number line:

![]()

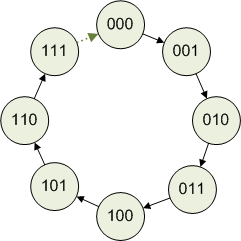

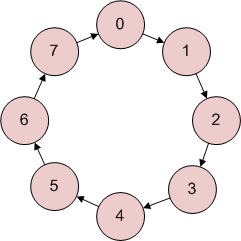

Then, curl the number line into a circle so that 1000 overlaps the 000:

In 3-bit arithmetic, additions, subtractions and multiplications work just they do in ordinary arithmetic, except that they move along a closed ring of 8 numbers, rather than along the infinite number line. Otherwise, mostly the same rules apply: 0+a=a, 1*a=a, a+b=b+a, a*(b+c)=a*b+a*c, and so on.

Additions and multiplications modulo a power of two are convenient to implement in hardware. An adder that computes c = a + b for 3-bit integers can be implemented like this:

c0 = (a0 XOR b0) c1 = (a1 XOR b1) XOR (a0 AND b0) c2 = (a2 XOR b2) XOR ((a1 AND b1) OR ((a1 XOR b1) AND (a0 AND b0)))

Binary arithmetic and signs

Now comes the cool part: the adder for unsigned integers can be used for signed integers too, exactly as it is! Similarly, a multiplier for unsigned integers works for signed integers too. (Division needs to be handled separately, though.)

Recall that the adder I showed works in modular arithmetic. The adder represents integers as 3-bit values from 000 to 111. You can interpret those eight values as signed or unsigned:

| Binary | Unsigned value | Signed value |

| 000 | 0 | 0 |

| 001 | 1 | 1 |

| 010 | 2 | 2 |

| 011 | 3 | 3 |

| 100 | 4 | -4 |

| 101 | 5 | -3 |

| 110 | 6 | -2 |

| 111 | 7 | -1 |

Notice that the signed value and the unsigned value are congruent modulo 8, and so equivalent as far as the adder is concerned. For example, 101 means either 5 or –3. On a ring of size 8, moving 5 numbers forward is equivalent to moving 3 numbers backwards.

The 3-bit adder and multiplier work in the 3-bit binary arithmetic, not in ‘actual’ integers. It is up to you whether you interpret their inputs and outputs as unsigned integers (the left ring), or as signed integers (the right ring):

(Of course, you could also decide that the eight values should represent say [0, 1, –6, –5, –4, –3, –2, -1].)

Let’s take a look at an example. In unsigned 3-bit integers, we can compute the following:

6 + 7 –> 5

In signed 3-bit integers, the computation comes out like this:

(-2) + (-1) –> -3

In 3-bit arithmetic, the computation is the same in both the signed and the unsigned case:

110 + 111 –> 101

Two’s complement

The representation of signed integers that I showed above is the representation used by modern processors. It is called “two’s complement” because to negate an integer, you subtract it from 2N. For example, to get the representation of –2 in 3-bit arithmetic, you can compute 8 – 2 = 6, and so –2 is represented in two’s complement as 6 in binary: 110.

This is another way to compute two’s complement, which is easier to imagine implemented in hardware:

- Start with a binary representation of the number you need to negate

- Flip all bits

- Add one

The reason why this works is that flipping bits is equivalent to subtracting the number from (2N – 1). We actually need to subtract the number from 2N, and step 3 compensates for that – 1.

Modern processors and two’s complement

Today’s processors represent signed integers using two’s complement.

To see that, you can compare the x86 assembly emitted by a compiler for signed and unsigned integers. Here is a C# program that multiplies two signed integers:

int a = int.Parse(Console.ReadLine()); int b = int.Parse(Console.ReadLine()); Console.WriteLine(a * b);

The JIT compiler will use the IMUL instruction to compute a * b:

0000004f call 79084EA0

00000054 mov ecx,eax

00000056 imul esi,edi

00000059 mov edx,esi

0000005b mov eax,dword ptr [ecx]

For comparison, I can change the program to use unsigned integers (uint):

uint a = uint.Parse(Console.ReadLine()); uint b = uint.Parse(Console.ReadLine()); Console.WriteLine(a * b);

The JIT compiler will still use the IMUL instruction to compute a * b:

0000004f call 79084EA0

00000054 mov ecx,eax

00000056 imul esi,edi

00000059 mov edx,esi

0000005b mov eax,dword ptr [ecx]

The IMUL instruction does not know whether its arguments are signed or unsigned, and it can still multiply them correctly!

If you do look up IMUL instruction (say in the Intel Reference Manual), you’ll find that IMUL is the instruction for signed multiplication. And, there is another instruction for unsigned multiplication, MUL. How can there be separate instructions for signed and unsigned multiplications, now that I spent so much time arguing that the two kinds of multiplications are the same?

It turns out that there is actually a small difference between signed and unsigned multiplication: detection of overflow. In 3-bit arithmetic, the multiplication –1 * –2 -> 2 produces the correct answer. But, the equivalent unsigned multiplication 7 * 6 –> 2 produces an answer that is only correct in modular arithmetic, not “actually” correct.

So, MUL and IMUL behave the same way, except for their effect on the overflow processor flag. If your program does not check for overflow (and C# does not, by default) the instructions can be used interchangeably.

Wrapping up

Hopefully this article helps you understand how are signed integers represented inside the computer. With this knowledge under your belt, various behaviors of integers should be easier to understand.

Read more of my articles:

Fast and slow if-statements: branch prediction in modern processors

Gallery of processor cache effects

How GPU came to be used for general computation

Self-printing Game of Life in C#

And if you like my blog, subscribe!

Imagine that 90,000 years ago, every man alive at the time picked a different last name. Assuming that last names are inherited from father to son, how many different last names do you think there would be today?

It turns out that there would be only one last name!

Similarly, imagine that 200,000 years ago, every woman alive picked a different secret word, and told the secret word to her daughters. And, the female descendants would follow this tradition – a mother would always pass her secret word to her daughters.

As you might guess, today there would be only one secret word in circulation.

The man whose last name all men would carry is called “Y-chromosomal Adam” and the woman whose secret word all women would know is “Mitochondrial Eve”. The names come from the fact that Y chromosome is a piece of DNA inherited from father to son (just like last names), and mitochondrial DNA is inherited from mother to children (just like the hypothetical secret words).

Why the convergence?

So, how likely is it that one last name and one secret word will eventually come to dominate? Given enough time, it is virtually guaranteed, under some assumptions (e.g., the population does not become separated).

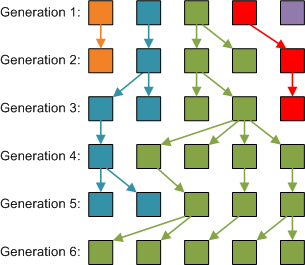

Here is a simulation of how last names of five men could flow through six generations:

After six generations, the last name shown as green is the only last name around, purely by chance! In biology, this random effect is called genetic drift.

And, the convergence does not only happen for small populations. Here are the numbers that I got by simulating different population sizes:

| Population | Generations to convergence |

| 10 | 23 |

| 50 | 73 |

| 100 | 239 |

| 500 | 891 |

| 1000 | 1395 |

| 5000 | 7312 |

| 10000 | 13491 |

Here is the implementation of this simulation:

static int MitochondrialEve(int populationSize) { Random random = new Random(); int generations = 0; int[] cur = new int[populationSize]; for (int i = 0; i < populationSize; i++) cur[i] = i; for ( ; cur.Max() != cur.Min(); generations++) { int[] next = new int[populationSize]; for (int i = 0; i < next.Length; i++) { next[i] = cur[random.Next(populationSize)]; } cur = next; } return generations; }

This simulation is not a sophisticated model of a human population, but it is sufficient for the purposes of illustrating genetic drift. It assumes that every man has on average one son, with a standard deviation of roughly one, and a binomial probability distribution.

By the way, the Y-chromosomal Adam lived roughly 60,000–90,000 years ago, while Eve lived roughly 200,000 years ago. The reason why genetic drift acted faster for men is that men have a larger variation in the number of offspring – one man can have many more children than one woman.

UPDATE: Based on comments at Hacker News and Reddit, some readers are dissatisfied with the assumption of a fixed population size. Of course, human population grew over the ages, but there were also periods when it shrank, sometimes a lot. For example, roughly 70,000 years ago, the human population may have dropped down to thousands of individuals (1, 2). So, a fixed population size is a reasonable simplification for my example.

The roles of Mitochondrial Eve and Y-chromosomal Adam

One noteworthy fact about Mitochondrial Eve and Y-chromosomal Adam is that their positions in the history are not as special as they may first appear. Let’s take a look at this in more depth.

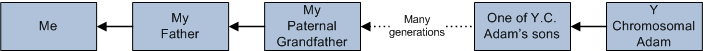

Tracing from me, I can follow a path of paternal ancestry all the way to Y-chromosomal Adam:

If I only trace the male ancestry, there is exactly one path that starts at me, and that path leads to Y-chromosomal Adam. You could start the diagram at any man alive today and you’d get a similar picture, with the lineage finally reaching the Y-chromosomal Adam.

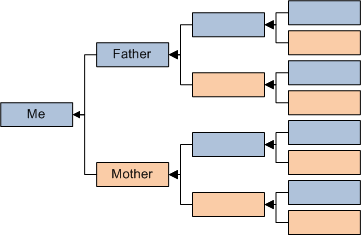

However, if I trace ancestry via both parents, the number of ancestors explodes. I have two parents, four grandparents, eight great grandparents, and so forth. The number of ancestors in each generation grows exponentially, although that cannot continue for long. If all ancestors in each generations were distinct, I would have more than 1 billion ancestors just 30 generations back, so the tree certainly has to start collapsing into a directed acyclic graph by then.

Tracing ancestry through both parents, there are many paths to follow, and each generation of ancestors contains a lot of people. Some of those paths will reach Y-chromosomal Adam, but other paths will reach other men in his generation. Similarly, some paths will reach Mitochondrial Eve, but other paths will reach other women in her generation.

Most recent common ancestor

So, Mitochondrial Eve only has a special position with respect to the mother-daughter relationships, and Y-chromosomal Adam only with respect to the father-son relationships.

What if you consider all types of ancestry, father-son, father-daughter, mother-son and mother-daughter? In the resulting directed acyclic graph, neither the Y-chomosomal Adam nor the Mitochondrial Eve appear in a special position. In fact, in the combined graph, the most recent ancestor of all today’s people lived much later than Y-chromosomal Adam. The most recent ancestor is estimated to have lived roughly 15,000 to 5,000 years ago.

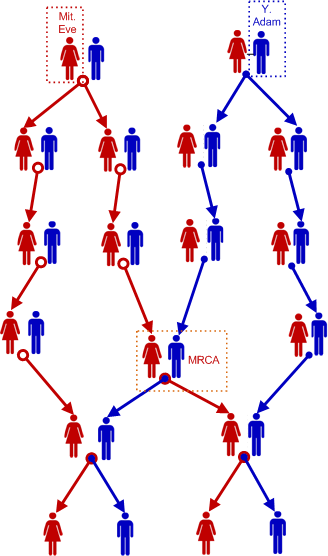

One way to visualize the relationship between Mitochondrial Eve, Y-chromosomal Adam, and the Most Recent Common Ancestor (MRCA) is to look at a small genealogy diagram with just a few people:

For the last generation consisting of just four people, this graph shows the Mitochondrial Eve, the Y-chromosomal Adam, and the most recent common ancestors (a couple in this example, but could also be one man or one woman). Adam is at the root of the blue tree, Eve is at the root of the red tree, and the most recent common ancestors are much lower in the graph.

The dating of Y-Adam and M-Eve

Finally, I’ll briefly give you an idea on how biologists calculate when Mitochondrial Eve and Y-chromosomal Adam lived.

The dating is based on DNA analysis. Changes in DNA accumulate at a certain rate that depends on various factors – region of the DNA, the species, population size, etc. To date Mitochondrial Eve, biologists calculate an estimate of the mitochondrial DNA (mtDNA) mutation rate. Then, they look at how much mtDNA varies between today’s women, and then calculate how long it would take to achieve that degree of variation.

Another interesting fact is that the titles of Mitochondrial Eve and Y-chromosomal Adam are not permanent, but instead are reassigned over time. For example, the woman who we call “Mitochondrial Eve” today did not hold that title during her lifetime. Instead, there was another unknown woman who was the most recent common matrilineal ancestor of all women alive at Eve’s time.

Final words

I hope you enjoyed the article. I originally learned about Y-chromosomal Adam and Mitochondrial Eve from reading Before the Dawn, and immediately knew I had to blog about them from a programmer’s perspective. If you want to read more on the topic, the Wikipedia page on Mitochondrial Eve is a good start.

Read more of my articles:

Fast and slow if statements: branch prediction in modern processors

Human heart is a Turing machine, research on XBox 360 shows. Wait, what?

Self-printing Game of Life in C#

And if you like my blog, subscribe!

Did you know that the performance of an if-statement depends on whether its condition has a predictable pattern? If the condition is always true or always false, the branch prediction logic in the processor will pick up the pattern. On the other hand, if the pattern is unpredictable, the if-statement will be much more expensive. In this article, I’ll explain why today’s processors behave this way.

Let’s measure the performance of this loop with different conditions:

for (int i = 0; i < max; i++) if (<condition>) sum++;

Here are the timings of the loop with different True-False patterns:

| Condition | Pattern | Time (ms) |

| (i & 0x80000000) == 0 | T repeated | 322 |

| (i & 0xffffffff) == 0 | F repeated | 276 |

| (i & 1) == 0 | TF alternating | 760 |

| (i & 3) == 0 | TFFFTFFF… | 513 |

| (i & 2) == 0 | TTFFTTFF… | 1675 |

| (i & 4) == 0 | TTTTFFFFTTTTFFFF… | 1275 |

| (i & 8) == 0 | 8T 8F 8T 8F … | 752 |

| (i & 16) == 0 | 16T 16F 16T 16F … | 490 |

A “bad” true-false pattern can make an if-statement up to six times slower than a “good” pattern! Of course, which pattern is good and which is bad depends on the exact instructions generated by the compiler and on the specific processor.

Let’s look at the processor counters

One way to understand how the processor used its time is to look at the hardware counters. To help with performance tuning, modern processors track various counters as they execute code: the number of instructions executed, the number of various types of memory accesses, the number of branches encountered, and so forth. To read the counters, you’ll need a tool such as the profiler in Visual Studio 2010 Premium or Ultimate, AMD Code Analyst or Intel VTune.

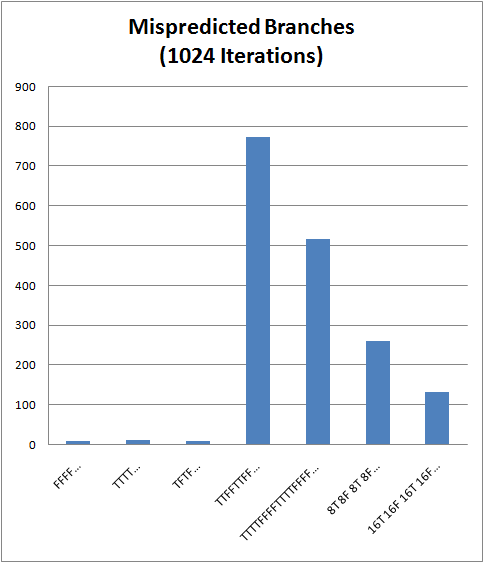

To verify that the slowdowns we observed were really due to the if-statement performance, we can look at the Mispredicted Branches counter:

The worst pattern (TTFFTTFF…) results in 774 branch mispredictions, while the good patterns only get around 10. No wonder that the bad case took the longest 1.67 seconds, while the good patterns only took around 300ms!

Let’s take a look at what “branch prediction” does, and why it has a major impact on the processor performance.

What’s the role of branch prediction?

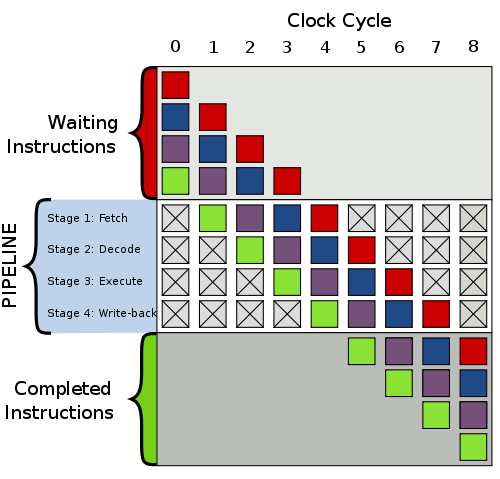

To explain what branch prediction is and why it impacts the performance numbers, we first need to take a look at how modern processors work. To complete each instruction, the CPU goes through these (and more) stages:

1. Fetch: Read the next instruction.

2. Decode: Determine the meaning of the instruction.

3. Execute: Perform the real ‘work’ of the instruction.

4. Write-back: Store results into memory.

An important optimization is that the stages of the pipeline can process different instructions at the same time. So, as one instruction is getting fetched, a second one is being decoded, a third is executing and the results of fourth are getting written back. Modern processors have pipelines with 10 – 31 stages (e.g., Pentium 4 Prescott has 31 stages), and for optimum performance, it is very important to keep all stages as busy as possible.

Image from http://commons.wikimedia.org/wiki/File:Pipeline,_4_stage.svg

Branches (i.e. conditional jumps) present a difficulty for the processor pipeline. After fetching a branch instruction, the processor needs to fetch the next instruction. But, there are two possible “next” instructions! The processor won’t be sure which instruction is the next one until the branching instruction makes it to the end of the pipeline.

Instead of stalling the pipeline until the branching instruction is fully executed, modern processors attempt to predict whether the jump will or will not be taken. Then, the processor can fetch the instruction that it thinks is the next one. If the prediction turns out wrong, the processor will simply discard the partially executed instructions that are in the pipeline. See the Wikipedia page on branch predictor implementation for some typical techniques used by processors to collect and interpret branch statistics.

Modern branch predictors are good at predicting simple patterns: all true, all false, true-false alternating, and so on. But if the pattern happens to be something that throws off the branch predictor, the performance hit will be significant. Thankfully, most branches have easily predictable patterns, like the two examples highlighted below:

int SumArray(int[] array) { if (array == null) throw new ArgumentNullException("array"); int sum=0; for(int i=0; i<array.Length; i++) sum += array[i]; return sum; }

The first highlighted condition validates the input, and so the branch will be taken only very rarely. The second highlighted condition is a loop termination condition. This will also almost always go one way, unless the arrays processed are extremely short. So, in these cases – as in most cases – the processor branch prediction logic will be effective at preventing stalls in the processor pipeline.

Updates and Clarifications

This article got picked up by reddit, and got a fair bit of attention in the reddit comments. I’ll respond to the questions, comments and criticisms below.

First, regarding the comments that optimizing for branch prediction is generally a bad idea: I agree. I do not argue anywhere in the article that you should try to write your code to optimize for branch prediction. For the vast majority of high-level code, I can’t even imagine how you’d do that.

Second, there was a concern whether the executed instructions for different cases differ in something else other than the constant value. They don’t – I looked at the JIT-ted assembly. If you’d like to see the JIT-ted assembly code or the C# source code, send me an email and I’ll send them back. (I am not posting the code here because I don’t want to blow up this update.)

Third, another question was on the surprisingly poor performance of the TTFF* pattern. The TTFF* pattern has a short period, and as such should be an easy case for the branch prediction algorithms.

However, the problem is that modern processors don’t track history for each branching instruction separately. Instead, they either track global history of all branches, or they have several history slots, each potentially shared by multiple branching instructions. Or, they can use some combination of these tricks, together with other techniques.

So, the TTFF pattern in the if-statement may not be TTFF by the time it gets to the branch predictor. It may get interleaved with other branches (there are 2 branching instructions in the body of a for-loop), and possibly approximated in other ways too. But, I don’t claim to be an expert on what precisely each processor does, and if someone reading this has an authoritative reference to how different processors behave (esp. Intel Core2 that I tested on), please let me know in comments.

Read more of my articles:

Gallery of processor cache effects

How GPU came to be used for general computation

What really happens when you navigate to a URL

Self-printing Game of Life in C#

And if you like my blog, subscribe!

Sometimes you need to access private fields and call private methods on an object – for testing, experimentation, or to work around issues in third-party libraries.

.NET has long provided a solution to this problem: reflection. Reflection allows you to call private methods and read or write private fields from outside of the class, but is verbose and messy to write. In C# 4, this problem can be solved in a neat way using dynamic typing.

As a simple example, I’ll show you how to access internals of the List<T> class from the BCL standard library. Let’s create an instance of the List<int> type:

List<int> realList = new List<int>();

To access the internals of List<int>, we’ll wrap it with ExposedObject – a type I’ll show you how to implement:

dynamic exposedList = ExposedObject.From(realList);

And now, via the exposedList object, we can access the private fields and methods of the List<> class:

// Read a private field - prints 0 Console.WriteLine(exposedList._size); // Modify a private field exposedList._items = new int[] { 5, 4, 3, 2, 1 }; // Modify another private field exposedList._size = 5; // Call a private method exposedList.EnsureCapacity(20);

Of course, _size, _items and EnsureCapacity() are all private members of the List<T> class! In addition to the private members, you can still access the public members of the exposed list:

// Add a value to the list exposedList.Add(0); // Enumerate the list. Prints "5 4 3 2 1 0" foreach (var x in exposedList) Console.WriteLine(x);

Pretty cool, isn’t it?

How does ExposedObject work?

The example I showed uses ExposedObject to access private fields and methods of the List<T> class. ExposedObject is a type I implemented using the dynamic typing feature in C# 4.

To create a dynamically-typed object in C#, you need to implement a class derived from DynamicObject. The derived class will implement a couple of methods whose role is to decide what to do whenever a method gets called or a property gets accessed on your dynamic object.

Here is a dynamic type that will print the name of any method you call on it:

class PrintingObject : DynamicObject { public override bool TryInvokeMember( InvokeMemberBinder binder, object[] args, out object result) { Console.WriteLine(binder.Name); // Print the name of the called method result = null; return true; } }

Let’s test it out. This program prints “HelloWorld” to the console:

class Program { static void Main(string[] args) { dynamic printing = new PrintingObject(); printing.HelloWorld(); return; } }

There is only a small step from PrintingObject to ExposedObject – instead of printing the name of the method, ExposedObject will find the appropriate method and invoke it via reflection. A simple version of the code looks like this:

class ExposedObjectSimple : DynamicObject { private object m_object; public ExposedObjectSimple(object obj) { m_object = obj; } public override bool TryInvokeMember( InvokeMemberBinder binder, object[] args, out object result) { // Find the called method using reflection var methodInfo = m_object.GetType().GetMethod( binder.Name, BindingFlags.NonPublic | BindingFlags.Public | BindingFlags.Instance); // Call the method result = methodInfo.Invoke(m_object, args); return true; } }

This is all you need in order to implement a simple version of ExposedObject.

What else can you do with ExposedObject?

The ExposedObjectSimple implementation above illustrates the concept, but it is a bit naive – it does not handle field accesses, multiple methods with the same name but different argument lists, generic methods, static methods, and so on. I implemented a more complete version of the idea and published it as a CodePlex project: http://exposedobject.codeplex.com/

You can call static methods by creating an ExposedClass over the class. For example, let’s call the private File.InternalExists() static method:

dynamic fileType = ExposedClass.From(typeof(System.IO.File)); bool exists = fileType.InternalExists("somefile.txt");

You can call generic methods (static or non-static) too:

dynamic enumerableType = ExposedClass.From(typeof(System.Linq.Enumerable)); Console.WriteLine( enumerableType.Max<int>(new[] { 1, 3, 5, 3, 1 }));

Type inference for generics works too. You don’t have to specify the int generic argument in the Max method call:

dynamic enumerableType = ExposedClass.From(typeof(System.Linq.Enumerable)); Console.WriteLine( enumerableType.Max(new[] { 1, 3, 5, 3, 1 }));

And to clarify, all of these different cases have to be explicitly handled. Deciding which method should be called is normally done by the compiler. The logic on overload resolution is hidden away in the compiler, and so unavailable at runtime. To duplicate the method binding rules, we have to duplicate the logic of the compiler.

You can also cast a dynamic object either to its own type, or to a base class:

List<int> realList = new List<int>(); dynamic exposedList = ExposedObject.From(realList); List<int> realList2 = exposedList; Console.WriteLine(realList == realList2); // Prints "true"

So, after casting the exposed object (or assigning it into an appropriately typed variable), you can get back the original object!

Updates

Based on some of the responses the article is getting, I should clarify: I am definitely not suggesting that you use ExposedObject regularly in your production code! That would be a terrible idea.

As I said in the article, ExposedObject can be useful for testing, experimentation, and rare hacks. Also, it is an interesting example of how dynamic typing works in C#.

Also, apparently Mauricio Scheffer came up with the same idea almost a year before me.

Read more of my articles:

Gallery of processor cache effects

What really happens when you navigate to a URL

Human heart is a Turing machine, research on XBox 360 shows. Wait, what?

And if you like my blog, subscribe!

The story of how GPU came to be used for high-performance computation is pretty cool. Hardware heavily optimized for graphics turned out to be useful for another use: certain types of high-performance computations. In this article, I will explore how and why this happened, and summarize the state of general computation on GPUs today.

Programmable graphics

The first step towards computation on the GPU was introduction of programmable shaders. Both DirectX and OpenGL added support for programmable shaders roughly a decade ago, giving game designers more freedom to create custom graphics effects. Instead of just composing pre-canned effects, graphic artists can now write little programs that execute directly on the GPU. As of DirectX 8, they can specify two types of shader programs for every object in the scene: a vertex shader and a pixel shader.

A vertex shader is a function invoked on every vertex in the 3D object. The function transforms the vertex and returns its position relative to the camera view. By transforming vertices, vertex shaders help implement realistic skin, clothes, facial expressions, and similar effects.

A pixel shader is a function invoked on every pixel covered by a particular object and returns the color of the pixel. To compute the output color, the pixel shader can use a variety of optional inputs: XY-position on the screen, XYZ-position in the scene, position in the texture, the direction of the surface (i.e., the normal vector), etc. Pixel shader can also read textures, bump maps, and other inputs.

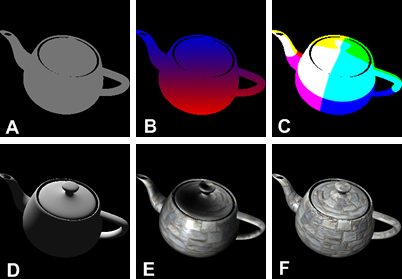

Here is a simple scene, rendered with six different pixel shaders applied to the teapot:

A always returns the same color. B varies the color based on the screen Y-coordinate. C sets the color depending on the XYZ screen coordinates. D sets the color proportionally to the cosine of the angle between the surface normal and the light direction (“diffuse lighting”). E uses a slightly more complex lighting model and a texture, and F also adds a bump map.

If you are curious how lighting shaders are implemented, check out GamaSutra’s Implementing Lighting Models With HLSL.

Realization: shaders can be used for computation!

Let’s take a look at a simple pixel shader that just blurs a texture. This shader is implemented in HLSL (a DirectX shader language):

float4 ps_main( float2 t: TEXCOORD0 ) : COLOR0

{

float dx = 2/fViewportWidth;

float dy = 2/fViewportHeight;

return

0.2 * tex2D( baseMap, t ) +

0.2 * tex2D( baseMap, t + float2(dx, 0) ) +

0.2 * tex2D( baseMap, t + float2(-dx, 0) ) +

0.2 * tex2D( baseMap, t + float2(0, dy) ) +

0.2 * tex2D( baseMap, t + float2(0, -dy) );

}

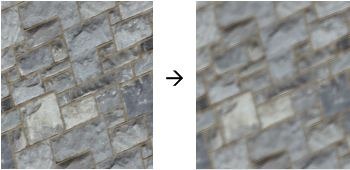

The texture blur has this effect:

This is not exactly a breath-taking effect, but the interesting part is that simulations of car crashes, wind tunnels and weather patterns all follow this basic pattern of computation! All of these simulations are computations on a grid of points. Each point has one or more quantities associated with it: temperature, pressure, velocity, force, air flow, etc. In each iteration of the simulation, the neighboring points interact: temperatures and pressures are equalized, forces are transferred, grid is deformed, and so forth. Mathematically, the programs that run these simulations are partial differential equation (PDE) solvers.

As a trivial example, here is a simple simulation of heat dissipation. In each iteration, the temperature of each grid point is recomputed as an average over its nearest neighbors:

It is hard to overlook the fact that an iteration of this simulation is nearly identical to the blur operation. Hardware highly optimized for running pixel shaders will be able to run this simulation very fast. And, after years of refinement and challenges from latest and greatest games, GPUs became very efficient at using massive parallelism to execute shaders blazingly fast.

One cool example of a PDE solver is a liquid and smoke simulator. The structure of the simulation is similar to my trivial heat dissipation example, but instead of tracking the temperature of each grid point, the smoke simulator tracks pressure and velocity. Just as in the heat dissipation example, a grid point is affected by all of its nearest neighbors in each iteration.

This simulation was developed by Keenan Crane.

For a view into general computation on GPUs in 2004 when hacked-up pixel shaders were the state of the art, see the General-Purpose Computation on GPUs section of GPU Gems 2.

Arrival of GPGPU

Once GPUs have shown themselves to be a good fit for certain types of high-performance computations (like PDEs), GPU manufacturers moved to make GPUs more attractive for general-purpose computing. The idea of General Purpose computation on a GPU (“GPGPU”) became a hot topic in high-performance computing.

GPGPU computing is based around compute kernels. A compute kernel is a generalization of a pixel shader:

- Like a pixel shader, a compute kernel is a routine that will be invoked on each point in the input space.

- A pixel shader always operates on two-dimensional space. A compute kernel can work on space of any dimensionality.

- A pixel shader returns a single color. A compute kernel can write an arbitrary number of outputs.

- A pixel shader operates on 32-bit floating-point numbers. A compute kernel also supports 64-bit floating-point numbers and integers.

- A pixel shader reads from textures. A compute kernel can read from any place in GPU memory.

- Additionally, compute kernels running on the same core can share data via an explicitly managed per-core cache.

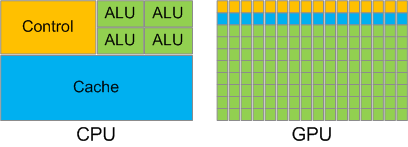

Comparison of a GPU and a CPU

The control flow of a modern application is typically very complicated. Just think about all the different tasks that must be completed to show this article in your browser. To display this blog article, the CPU has to communicate with various devices, maintain thousands of data structures, position thousands of UI elements, parse perhaps a hundred file formats, … that does not even begin to scratch the surface. And, not only are all of these tasks different, they also depend on each other in very complex ways.

Compare that with the control flow of a pixel shader. A pixel shader is a single routine that needs to be invoked roughly a million times, each time on a different input. Different shader invocations are pretty much independent and once all are done, the GPU starts over again with a scene where objects have moved a bit.

It shouldn’t come as a surprise that hardware optimized for running a pixel shader will be quite different from hardware optimized for tasks like viewing web pages. A CPU greatly benefits from a sophisticated execution pipeline with multi-level caches, instruction reordering, prefetching, branch prediction, and other clever optimizations. A GPU does not need most of those complex features for its much simpler control flow. Instead, a GPU benefits from lots of Arithmetic Logic Units (ALUs) to add, multiply and divide floating point numbers in parallel.

This table shows the most important differences between a CPU and a GPU today:

| CPU | GPU |

| 2-4 cores | 16-32 cores |

| Each core runs 1-2 independent threads in parallel | Each core runs 16-32 threads in parallel. All threads on a core must execute the same instruction at any time. |

| Automatically managed hierarchy of caches | Each core has 16-64kB of cache, explicitly managed by the programmer |

| 0.1 billion floating-point operations / second (0.1 TFLOP) | 1 billion floating-point operations / second (1 TFLOP) |

| Main memory throughput: 10GB / sec | GPU memory throughput: 100GB / sec |

All of this means that if a program can be broken up into many threads all doing the same thing on different data (ideally executing arithmetic operations), a GPU will probably be able to do this an order of magnitude faster than a CPU. On the other hand, on an application with a complex control flow, CPU is going to be the one winning by orders of magnitude. Going back to my earlier example, it should be clear why a CPU will excel at running a browser and a GPU will excel at executing a pixel shader.

This chart illustrates how a CPU and a GPU use up their “silicon budget”. A CPU uses most of its transistors for the L1 cache and for execution control. A GPU dedicates the bulk of its transistors to Arithmetic Logic Units (ALUs).

Adapted from NVidia’s CUDA Programming Guide.

NVidia’s upcoming Fermi chip will slightly change the comparison table. Fermi introduces a per-core automatically-managed L1 cache. It will be very interesting to see what kind of impact the introduction of an L1 cache will have on the types of programs that can run on the GPU. One point is fairly clear – the penalty for register spills into main memory will be greatly reduced (this point may not make sense until you read the next section).

GPGPU Programming

Today, writing efficient GPGPU programs requires in-depth understanding of the hardware. There are three popular programming models:

- DirectCompute – Microsoft’s API for defining compute kernels, introduced in DirectX 11

- CUDA – NVidia’s C-based language for programming compute kernels

- OpenCL – API originally proposed by Apple and now developed by Khronos Group

Conceptually, the models are very similar. The table below summarizes some of the terminology differences between the models:

| DirectCompute | CUDA | OpenCL |

| thread | thread | work item |

| thread group | thread block | work group |

| group-shared memory | shared memory | local memory |

| warp? | warp | wavefront |

| barrier | barrier | barrier |

Writing high-performance GPGPU code is not for the faint at heart (although the same could probably be said about any type of high-performance computing). Here are examples of some issues you need to watch out for when writing compute kernels:

- The program has to have plenty of threads (thousands)

- Not too many threads, though, or cores will run out of registers and will have to simulate additional registers using main GPU memory.

- It is important that threads running on one core access main memory in such a pattern that the hardware will be coalesce the memory accesses from different threads. This optimization alone can make an order of magnitude difference.

- … and so on.

Explaining all of these performance topics in detail is well beyond the scope of this article, but hopefully this gives you an idea of what GPGPU programming is about, and what kinds of problems it can be applied to.

Read more of my articles:

Gallery of processor cache effects

What really happens when you navigate to a URL

Human heart is a Turing machine, research on XBox 360 shows. Wait, what?

And if you like my blog, subscribe!

The memory model is a fascinating topic – it touches on hardware, concurrency, compiler optimizations, and even math.

The memory model defines what state a thread may see when it reads a memory location modified by other threads. For example, if one thread updates a regular non-volatile field, it is possible that another thread reading the field will never observe the new value. This program never terminates (in a release build):

As a software developer, you certainly have a high-level picture of how web apps work and what kinds of technologies are involved: the browser, HTTP, HTML, web server, request handlers, and so on.

In this article, we will take a deeper look at the sequence of events that take place when you visit a URL.

1. You enter a URL into the browser

It all starts here:

2. The browser looks up the IP address for the domain name

The first step in the navigation is to figure out the IP address for the visited domain. The DNS lookup proceeds as follows:

Most of my readers will understand that cache is a fast but small type of memory that stores recently accessed memory locations. This description is reasonably accurate, but the “boring” details of how processor caches work can help a lot when trying to understand program performance.

In this blog post, I will use code samples to illustrate various aspects of how caches work, and what is the impact on the performance of real-world programs.

The examples are in C#, but the language choice has little impact on the performance scores and the conclusions they lead to.

Example 1: Memory accesses and performance

How much faster do you expect Loop 2 to run, compared Loop 1?

int[] arr = new int[64 * 1024 * 1024]; Read the rest of this entry »

Recent Comments