The story of how the GPU came to be used for high-performance computation is pretty cool. Hardware heavily optimized for graphics turned out to be useful for another purpose: certain types of high-performance computations. In this article, I will explore how and why this happened, and summarize the state of general computation on GPUs today.

Programmable graphics

The first step towards computation on the GPU was the introduction of programmable shaders. Both DirectX and OpenGL added support for programmable shaders roughly a decade ago, giving game designers more freedom to create custom graphics effects. Instead of just composing pre-canned effects, graphic artists can now write little programs that execute directly on the GPU. As of DirectX 8, they can specify two types of shader programs for every object in the scene: a vertex shader and a pixel shader.

A vertex shader is a function invoked on every vertex in the 3D object. The function transforms the vertex and returns its position relative to the camera view. By transforming vertices, vertex shaders help implement realistic skin, clothes, facial expressions, and similar effects.

A pixel shader is a function that is invoked on every pixel covered by a particular object and returns the color of that pixel. To compute the output color, the pixel shader can use a variety of optional inputs: XY-position on the screen, XYZ-position in the scene, position in the texture, the direction of the surface (i.e., the normal vector), etc. A pixel shader can also read textures, bump maps, and other inputs.

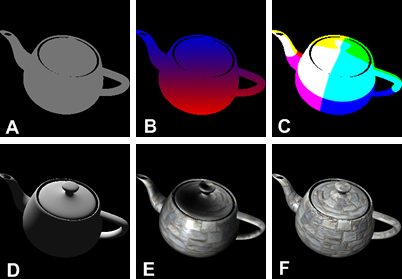

Here is a simple scene, rendered with six different pixel shaders applied to the teapot:

A always returns the same color. B varies the color based on the screen Y-coordinate. C sets the color depending on the XYZ screen coordinates. D sets the color proportionally to the cosine of the angle between the surface normal and the light direction (“diffuse lighting”). E uses a slightly more complex lighting model and a texture, and F also adds a bump map.
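As a rough illustration of the diffuse lighting used by shader D, here is a minimal Python sketch that scales a base color by the cosine of the angle between the surface normal and the light direction. The function names, and the convention that `light_dir` points from the surface towards the light, are assumptions made for this sketch; a real shader would be written in HLSL and run once per pixel on the GPU.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def diffuse_shader(normal, light_dir, base_color):
    """Return base_color scaled by the cosine of the angle between
    the surface normal and the direction towards the light."""
    n = normalize(normal)
    l = normalize(light_dir)
    cos_angle = sum(a * b for a, b in zip(n, l))
    intensity = max(0.0, cos_angle)  # surfaces facing away receive no light
    return tuple(intensity * c for c in base_color)

# Light shining straight along the normal: full brightness.
facing = diffuse_shader((0, 0, 1), (0, 0, 1), (1.0, 0.5, 0.25))
# Light parallel to the surface: no diffuse contribution.
grazing = diffuse_shader((0, 0, 1), (1, 0, 0), (1.0, 0.5, 0.25))
# Light at 60 degrees from the normal: half brightness.
tilted = diffuse_shader((0, 0, 1), (0, math.sqrt(3) / 2, 0.5), (1.0, 1.0, 1.0))
```

The clamp to zero is a common choice so that surfaces facing away from the light come out black rather than negative.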

If you are curious how lighting shaders are implemented, check out Gamasutra’s “Implementing Lighting Models With HLSL”.

Realization: shaders can be used for computation!

Let’s take a look at a simple pixel shader that just blurs a texture. This shader is implemented in HLSL (a DirectX shader language):

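```hlsl
// Assumed to be declared elsewhere and set by the application:
// the source texture sampler and the viewport size in pixels.
sampler2D baseMap;
float fViewportWidth;
float fViewportHeight;

float4 ps_main( float2 t : TEXCOORD0 ) : COLOR0
{
    // Sampling offsets in texture coordinates.
    float dx = 2.0 / fViewportWidth;
    float dy = 2.0 / fViewportHeight;

    // Average the texel with its four neighbors, each weighted equally.
    return
        0.2 * tex2D( baseMap, t ) +
        0.2 * tex2D( baseMap, t + float2( dx, 0 ) ) +
        0.2 * tex2D( baseMap, t + float2( -dx, 0 ) ) +
        0.2 * tex2D( baseMap, t + float2( 0, dy ) ) +
        0.2 * tex2D( baseMap, t + float2( 0, -dy ) );
}
```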

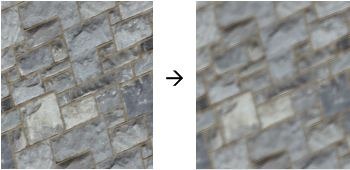

The texture blur has this effect:

This is not exactly a breathtaking effect, but the interesting part is that simulations of car crashes, wind tunnels and weather patterns all follow this basic pattern of computation! All of these simulations are computations on a grid of points. Each point has one or more quantities associated with it: temperature, pressure, velocity, force, air flow, etc. In each iteration of the simulation, the neighboring points interact: temperatures and pressures are equalized, forces are transferred, the grid is deformed, and so forth. Mathematically, the programs that run these simulations are partial differential equation (PDE) solvers.

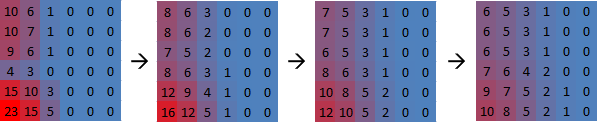

As a trivial example, here is a simple simulation of heat dissipation. In each iteration, the temperature of each grid point is recomputed as an average over its nearest neighbors:

It is hard to overlook the fact that an iteration of this simulation is nearly identical to the blur operation. Hardware highly optimized for running pixel shaders will be able to run this simulation very fast. And, after years of refinement and challenges from the latest and greatest games, GPUs became very efficient at using massive parallelism to execute shaders blazingly fast.
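To make the parallel with the blur shader concrete, here is a minimal Python sketch of one heat-dissipation iteration. It assumes the same five-point weighting as the blur shader (the point itself plus its four nearest neighbors, each weighted 0.2) and clamps reads at the grid edges. The name `heat_step` and the edge handling are illustrative choices, not taken from any particular simulator.

```python
def heat_step(grid):
    """Return a new grid where each cell is the average of itself
    and its four nearest neighbors (the same stencil as the blur)."""
    rows, cols = len(grid), len(grid[0])

    def at(r, c):
        # Clamp out-of-range reads to the nearest edge cell,
        # similar to clamped texture addressing.
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return grid[r][c]

    return [
        [
            0.2 * (at(r, c) + at(r, c + 1) + at(r, c - 1)
                   + at(r + 1, c) + at(r - 1, c))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

# A single hot point in the middle of a cold 3x3 plate.
plate = [
    [0.0, 0.0, 0.0],
    [0.0, 100.0, 0.0],
    [0.0, 0.0, 0.0],
]
after_one_step = heat_step(plate)
```

Every output cell depends only on the previous grid, so all cells can be computed independently. That is exactly the property that lets a GPU run one invocation per grid point in parallel.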

One cool example of a PDE solver is a liquid and smoke simulator. The structure of the simulation is similar to my trivial heat dissipation example, but instead of tracking the temperature of each grid point, the smoke simulator tracks pressure and velocity. Just as in the heat dissipation example, a grid point is affected by all of its nearest neighbors in each iteration.

This simulation was developed by Keenan Crane.

For a view into general computation on GPUs in 2004 when hacked-up pixel shaders were the state of the art, see the General-Purpose Computation on GPUs section of GPU Gems 2.

Arrival of GPGPU

Once GPUs had shown themselves to be a good fit for certain types of high-performance computations (like PDE solvers), GPU manufacturers moved to make GPUs more attractive for general-purpose computing. The idea of General-Purpose computation on a GPU (“GPGPU”) became a hot topic in high-performance computing.

GPGPU computing is based around compute kernels. A compute kernel is a generalization of a pixel shader:

- Like a pixel shader, a compute kernel is a routine that will be invoked on each point in the input space.

- A pixel shader always operates on a two-dimensional space. A compute kernel can work on a space of any dimensionality.

- A pixel shader returns a single color. A compute kernel can write an arbitrary number of outputs.

- A pixel shader operates on 32-bit floating-point numbers. A compute kernel also supports 64-bit floating-point numbers and integers.

- A pixel shader reads from textures. A compute kernel can read from any place in GPU memory.

- Additionally, compute kernels running on the same core can share data via an explicitly managed per-core cache.
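The compute kernel model in the list above can be mimicked in a few lines of Python. This is only a sequential sketch of the programming model, not a real GPU API: `launch`, `linear`, and `example_kernel` are hypothetical names, and a GPU would run the invocations in parallel rather than in a loop. The kernel is invoked once per point of a three-dimensional index space, reads from a flat buffer, and writes two outputs (one floating-point, one integer).

```python
from itertools import product

SHAPE = (2, 3, 4)  # a 3D index space; the launcher accepts any number of dimensions

def linear(index, shape):
    """Row-major offset of an N-dimensional index into a flat buffer."""
    offset = 0
    for i, n in zip(index, shape):
        offset = offset * n + i
    return offset

def launch(kernel, shape, *buffers):
    """Invoke the kernel once for every point in the index space."""
    for index in product(*(range(n) for n in shape)):
        kernel(index, *buffers)

def example_kernel(index, src, out_double, out_parity):
    i = linear(index, SHAPE)
    out_double[i] = 2.0 * src[i]    # first output: a floating-point value
    out_parity[i] = sum(index) % 2  # second output: an integer

size = 2 * 3 * 4
src = [float(i) for i in range(size)]
out_double = [0.0] * size
out_parity = [0] * size
launch(example_kernel, SHAPE, src, out_double, out_parity)
```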

Comparison of a GPU and a CPU

The control flow of a modern application is typically very complicated. Just think about all the different tasks that must be completed to show this article in your browser. To display this blog article, the CPU has to communicate with various devices, maintain thousands of data structures, position thousands of UI elements, parse perhaps a hundred file formats, … that does not even begin to scratch the surface. And, not only are all of these tasks different, they also depend on each other in very complex ways.

Compare that with the control flow of a pixel shader. A pixel shader is a single routine that needs to be invoked roughly a million times, each time on a different input. Different shader invocations are pretty much independent and once all are done, the GPU starts over again with a scene where objects have moved a bit.

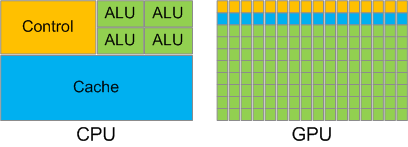

It shouldn’t come as a surprise that hardware optimized for running a pixel shader will be quite different from hardware optimized for tasks like viewing web pages. A CPU greatly benefits from a sophisticated execution pipeline with multi-level caches, instruction reordering, prefetching, branch prediction, and other clever optimizations. A GPU does not need most of those complex features for its much simpler control flow. Instead, a GPU benefits from lots of Arithmetic Logic Units (ALUs) to add, multiply and divide floating point numbers in parallel.

This table shows the most important differences between a CPU and a GPU today:

| CPU | GPU |
| --- | --- |
| 2-4 cores | 16-32 cores |
| Each core runs 1-2 independent threads in parallel | Each core runs 16-32 threads in parallel. All threads on a core must execute the same instruction at any time. |
| Automatically managed hierarchy of caches | Each core has 16-64 kB of cache, explicitly managed by the programmer |
| 100 billion floating-point operations / second (0.1 TFLOPS) | 1,000 billion floating-point operations / second (1 TFLOPS) |
| Main memory throughput: 10 GB / sec | GPU memory throughput: 100 GB / sec |

All of this means that if a program can be broken up into many threads all doing the same thing on different data (ideally executing arithmetic operations), a GPU will probably be able to do this an order of magnitude faster than a CPU. On the other hand, on an application with a complex control flow, the CPU is going to be the one winning by orders of magnitude. Going back to my earlier example, it should be clear why a CPU will excel at running a browser and a GPU will excel at executing a pixel shader.
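The constraint that all threads on a core execute the same instruction explains why branching code hurts on a GPU. The Python sketch below is a deliberately simplified cost model, not a description of any specific hardware: it assumes that when threads in a group disagree on a branch, the group runs both sides one after the other, with the non-participating threads idle. The function name and the cycle costs are hypothetical.

```python
def group_cycles(takes_branch, then_cost, else_cost):
    """Cycles spent by a group of lockstep threads on an if/else.

    takes_branch: one boolean per thread in the group.
    then_cost / else_cost: cycles needed to run each side of the branch.
    """
    cycles = 0
    if any(takes_branch):
        cycles += then_cost  # at least one thread needs the 'then' side
    if not all(takes_branch):
        cycles += else_cost  # at least one thread needs the 'else' side
    return cycles

GROUP_SIZE = 32  # assumed group width, for illustration only

uniform = group_cycles([True] * GROUP_SIZE, then_cost=10, else_cost=20)
divergent = group_cycles([t % 2 == 0 for t in range(GROUP_SIZE)],
                         then_cost=10, else_cost=20)
```

Under this model, a group whose threads all take the same path pays for one side only, while a divergent group pays for both. That is why code with complex, data-dependent control flow is a poor fit for the GPU.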

This chart illustrates how a CPU and a GPU use up their “silicon budget”. A CPU uses most of its transistors for the L1 cache and for execution control. A GPU dedicates the bulk of its transistors to Arithmetic Logic Units (ALUs).

Adapted from NVidia’s CUDA Programming Guide.

NVidia’s upcoming Fermi chip will slightly change the comparison table. Fermi introduces a per-core automatically-managed L1 cache. It will be very interesting to see what kind of impact the introduction of an L1 cache will have on the types of programs that can run on the GPU. One point is fairly clear – the penalty for register spills into main memory will be greatly reduced (this point may not make sense until you read the next section).

GPGPU Programming

Today, writing efficient GPGPU programs requires in-depth understanding of the hardware. There are three popular programming models:

- DirectCompute – Microsoft’s API for defining compute kernels, introduced in DirectX 11

- CUDA – NVidia’s C-based language for programming compute kernels

- OpenCL – API originally proposed by Apple and now developed by Khronos Group

Conceptually, the models are very similar. The table below summarizes some of the terminology differences between the models:

| DirectCompute | CUDA | OpenCL |
| --- | --- | --- |
| thread | thread | work item |
| thread group | thread block | work group |
| group-shared memory | shared memory | local memory |
| warp? | warp | wavefront |
| barrier | barrier | barrier |
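Whatever the terminology, all three models arrange threads into groups in the same way, and a kernel typically starts by working out which data element the current thread owns. Here is a minimal Python sketch of that one-dimensional index arithmetic; the function names are made up for illustration and do not correspond to any of the three APIs.

```python
def global_index(group_id, group_size, local_id):
    """Index of a thread across the whole launch, given its group
    and its position inside that group."""
    return group_id * group_size + local_id

def groups_needed(item_count, group_size):
    """Number of groups required to cover item_count elements
    (rounded up, so the last group may be partly idle)."""
    return (item_count + group_size - 1) // group_size

# Thread 5 of group 2, with 256 threads per group.
index = global_index(group_id=2, group_size=256, local_id=5)
# Covering 1000 elements with groups of 256 threads.
groups = groups_needed(1000, 256)
```

Because the group count is rounded up, a kernel usually checks that its global index is within bounds before touching memory.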

Writing high-performance GPGPU code is not for the faint of heart (although the same could probably be said about any type of high-performance computing). Here are examples of some issues you need to watch out for when writing compute kernels:

- The program has to have plenty of threads (thousands).

- Not too many threads, though, or cores will run out of registers and will have to simulate additional registers using main GPU memory.

- It is important that threads running on one core access main memory in a pattern that lets the hardware coalesce the memory accesses from different threads. This optimization alone can make an order of magnitude difference.

- … and so on.
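To give a feel for the memory coalescing issue mentioned in the list above, here is a small Python sketch that counts how many memory segments a group of threads touches in one step. The 128-byte segment size and the 32-thread group are assumptions chosen for illustration, since actual values depend on the hardware, and `segments_touched` is a hypothetical helper rather than a real API.

```python
SEGMENT_BYTES = 128  # assumed size of one memory transaction
GROUP_SIZE = 32      # assumed number of threads issuing loads together
FLOAT_BYTES = 4

def segments_touched(addresses, segment_bytes=SEGMENT_BYTES):
    """Number of distinct memory segments covered by a set of byte addresses."""
    return len({addr // segment_bytes for addr in addresses})

# Thread t reads element t: neighboring threads read neighboring floats.
contiguous = [FLOAT_BYTES * t for t in range(GROUP_SIZE)]

# Thread t reads element 32 * t: every thread lands in a different segment.
strided = [FLOAT_BYTES * 32 * t for t in range(GROUP_SIZE)]

contiguous_segments = segments_touched(contiguous)
strided_segments = segments_touched(strided)
```

In this model the contiguous pattern is served by a single transaction, while the strided pattern needs one transaction per thread, which is where an order of magnitude difference can come from.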

Explaining all of these performance topics in detail is well beyond the scope of this article, but hopefully this gives you an idea of what GPGPU programming is about, and what kinds of problems it can be applied to.


Very informative article.

1 GFLOP = 1 billion floating point operations / second and…

1 TFLOP = 1000 billion floating point operations / second, right?

Great post,

Could you give a quick example of how to use nvcc to compile CUDA code, or a link to such an example? I find the nvcc documentation hard to understand.

Thanks


Great article, little mistake though..

You accidentally put the amount of threads a GPU core can run as the actual core count for current GPU’s. The current highest core count being 1600 from ATI and 480 from Nvidia, on a single GPU chip. (It should be noted though that ATI’s cores aren’t directly comparable to NVidia’s, so while one might think with such a higher core count the ATI card would be fast, it is not. It takes ATI’s cores 3 cycles to do the same thing Nvidia’s do in 1 cycle. Among many other differences.)


Hey Igor,

This is a really informative blog and there are many ideas which popped up in my mind.

I recently started working on parallelizing programs so that they scale to really large datasets. Microsoft .NET has many features that provide easy ways of parallelizing code, like PLINQ, PSEQ, and DryadLINQ. Extensions like PLINQ exploit parallelism by creating tasks for each chunk of work; these tasks are then executed by threads in the .NET thread pool. Thousands of tasks are created and then fed to these compute threads. The degree of parallelism of the program largely depends on the degree of parallelism of these compute threads.

If, for example, the UDFs (user-defined functions) in PLINQ queries are pure, that is, they do not have side effects, then they can be executed in parallel.

I am new to GPGPU, but is it possible to run these .NET tasks on GPU cores and exploit a larger degree of parallelism? If they are completely data-parallel, then I guess it should be possible. I would be really grateful to know your views on this.