Did you know that the performance of an if-statement depends on whether its condition has a predictable pattern? If the condition is always true or always false, the branch prediction logic in the processor will pick up the pattern. On the other hand, if the pattern is unpredictable, the if-statement will be much more expensive. In this article, I’ll explain why today’s processors behave this way.

Let’s measure the performance of this loop with different conditions:

for (int i = 0; i < max; i++) if (<condition>) sum++;

Here are the timings of the loop with different True-False patterns:

| Condition | Pattern | Time (ms) |

| (i & 0x80000000) == 0 | T repeated | 322 |

| (i & 0xffffffff) == 0 | F repeated | 276 |

| (i & 1) == 0 | TF alternating | 760 |

| (i & 3) == 0 | TFFFTFFF… | 513 |

| (i & 2) == 0 | TTFFTTFF… | 1675 |

| (i & 4) == 0 | TTTTFFFFTTTTFFFF… | 1275 |

| (i & 8) == 0 | 8T 8F 8T 8F … | 752 |

| (i & 16) == 0 | 16T 16F 16T 16F … | 490 |

A “bad” true-false pattern can make an if-statement up to six times slower than a “good” pattern! Of course, which pattern is good and which is bad depends on the exact instructions generated by the compiler and on the specific processor.

Let’s look at the processor counters

One way to understand how the processor used its time is to look at the hardware counters. To help with performance tuning, modern processors track various counters as they execute code: the number of instructions executed, the number of various types of memory accesses, the number of branches encountered, and so forth. To read the counters, you’ll need a tool such as the profiler in Visual Studio 2010 Premium or Ultimate, AMD Code Analyst or Intel VTune.

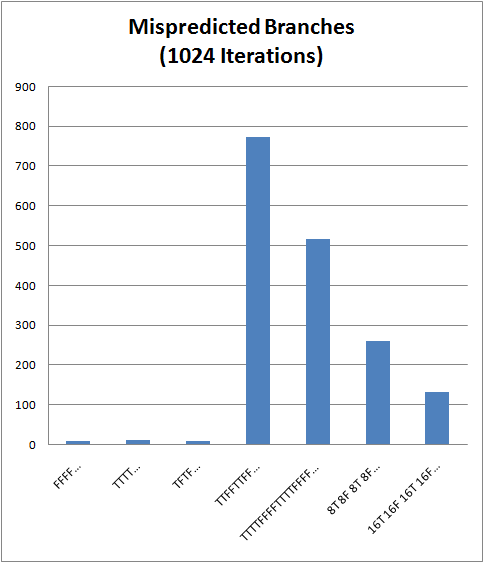

To verify that the slowdowns we observed were really due to the if-statement performance, we can look at the Mispredicted Branches counter:

The worst pattern (TTFFTTFF…) results in 774 branch mispredictions, while the good patterns only get around 10. No wonder that the bad case took the longest 1.67 seconds, while the good patterns only took around 300ms!

Let’s take a look at what “branch prediction” does, and why it has a major impact on the processor performance.

What’s the role of branch prediction?

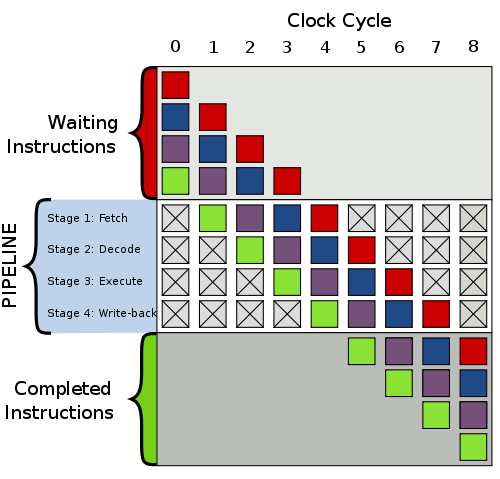

To explain what branch prediction is and why it impacts the performance numbers, we first need to take a look at how modern processors work. To complete each instruction, the CPU goes through these (and more) stages:

1. Fetch: Read the next instruction.

2. Decode: Determine the meaning of the instruction.

3. Execute: Perform the real ‘work’ of the instruction.

4. Write-back: Store results into memory.

An important optimization is that the stages of the pipeline can process different instructions at the same time. So, as one instruction is getting fetched, a second one is being decoded, a third is executing and the results of fourth are getting written back. Modern processors have pipelines with 10 – 31 stages (e.g., Pentium 4 Prescott has 31 stages), and for optimum performance, it is very important to keep all stages as busy as possible.

Image from http://commons.wikimedia.org/wiki/File:Pipeline,_4_stage.svg

Branches (i.e. conditional jumps) present a difficulty for the processor pipeline. After fetching a branch instruction, the processor needs to fetch the next instruction. But, there are two possible “next” instructions! The processor won’t be sure which instruction is the next one until the branching instruction makes it to the end of the pipeline.

Instead of stalling the pipeline until the branching instruction is fully executed, modern processors attempt to predict whether the jump will or will not be taken. Then, the processor can fetch the instruction that it thinks is the next one. If the prediction turns out wrong, the processor will simply discard the partially executed instructions that are in the pipeline. See the Wikipedia page on branch predictor implementation for some typical techniques used by processors to collect and interpret branch statistics.

Modern branch predictors are good at predicting simple patterns: all true, all false, true-false alternating, and so on. But if the pattern happens to be something that throws off the branch predictor, the performance hit will be significant. Thankfully, most branches have easily predictable patterns, like the two examples highlighted below:

int SumArray(int[] array) { if (array == null) throw new ArgumentNullException("array"); int sum=0; for(int i=0; i<array.Length; i++) sum += array[i]; return sum; }

The first highlighted condition validates the input, and so the branch will be taken only very rarely. The second highlighted condition is a loop termination condition. This will also almost always go one way, unless the arrays processed are extremely short. So, in these cases – as in most cases – the processor branch prediction logic will be effective at preventing stalls in the processor pipeline.

Updates and Clarifications

This article got picked up by reddit, and got a fair bit of attention in the reddit comments. I’ll respond to the questions, comments and criticisms below.

First, regarding the comments that optimizing for branch prediction is generally a bad idea: I agree. I do not argue anywhere in the article that you should try to write your code to optimize for branch prediction. For the vast majority of high-level code, I can’t even imagine how you’d do that.

Second, there was a concern whether the executed instructions for different cases differ in something else other than the constant value. They don’t – I looked at the JIT-ted assembly. If you’d like to see the JIT-ted assembly code or the C# source code, send me an email and I’ll send them back. (I am not posting the code here because I don’t want to blow up this update.)

Third, another question was on the surprisingly poor performance of the TTFF* pattern. The TTFF* pattern has a short period, and as such should be an easy case for the branch prediction algorithms.

However, the problem is that modern processors don’t track history for each branching instruction separately. Instead, they either track global history of all branches, or they have several history slots, each potentially shared by multiple branching instructions. Or, they can use some combination of these tricks, together with other techniques.

So, the TTFF pattern in the if-statement may not be TTFF by the time it gets to the branch predictor. It may get interleaved with other branches (there are 2 branching instructions in the body of a for-loop), and possibly approximated in other ways too. But, I don’t claim to be an expert on what precisely each processor does, and if someone reading this has an authoritative reference to how different processors behave (esp. Intel Core2 that I tested on), please let me know in comments.

Read more of my articles:

Gallery of processor cache effects

How GPU came to be used for general computation

What really happens when you navigate to a URL

Self-printing Game of Life in C#

And if you like my blog, subscribe!

Interesting insight. Does it hold true for any language? Seems that it may not. Would be interesting to see the difference in Flash AS3, JS, C# that it can make.

No. This is not language dependent. The compiler might make optimizations, like loop-unrolling that affects this performance, but the branch-prediction is very much encoded in hardware.

This also means that you will get different results depending on your processor.

Just as gottlieb says – branch prediction is a feature of the hardware, and so the compiler cannot escape it.

Of course, if you compile the same program with different compilers, you may well see different behavior of the branch prediction. The performance characteristics very much depend on the specific instructions emitted by the compiler, but branch prediction is always a factor.

wonderwhy-er: Why would this be any different in the languages you mention? Branchprediction happens at the hardware level and the only way to not have this “issue” is to use a language with out conditional statements or jumps. (JIT’ed languages can optimize on the fly though, to help the prediction, but you will still have misspredictions)

Of course, the thing that hasn’t been mentioned is that there is a way of avoiding predication for truly unpredictable conditions.

It’s not perfect, but if the instruction is “do X or do Y”, then you can tell the computer to do both, and pick the one that was right at a later stage. This can also have some performance implications, just to make life fun. (One of the key ones is that waiting for the ‘right’ answer can stall things as well, but it’s usually cheaper than a branch misprediction)

Pretty much all processors have some form of predication nowadays, although few are as mad on it as the ARM (other than the Itanium, which dials it up to 11).

Sure you didn’t just measure the performance impact of the indirect branch predictor (and not the standard branch predictor)? As the other posters pointed out in asking about the language impact, if you used an interpreted language (and not something that went straight to machine code), its possible the interpreters instruction dispatch mechanism is causing indirect branches to occur on the executed code path (which modern processors suck at predicting). If this is the case, than language choice *does* impact what the hardware sees. Paper from a few years back talking about this that I just found by googling (http://citeseerx.ist.psu.edu/v......1.14.5161).

Compiler technology does play a part in branch prediciton. For instance GCC has macros for encoding branch prediction information : likey & unlikely

http://kerneltrap.org/node/4705

These people saw 10% gain in their Scheme

http://people.csail.mit.edu/ja.....ter-branch

And this concerns reducing power consumption using Branch Prediction Prediction !

http://www.eecg.toronto.edu/~m.....iccd02.pdf

I had an assignment back in one of my computer architecture classes where I implemented various branch prediction schemes by modifying the simplescaler source (http://www.simplescalar.com/), and testing their performance on various code samples.

I recall using:

-Single branch counter where a specific number of bits was used to count up or down and then determine weather the branch instruction was predicted to be STRONGLY/WEAKLY TAKEN/NOT-TAKEN.

-Building on this there were a table of branch counters that provided more granularity.

-Computed table method with a single branch counter to determine whether

-Table method but with FIFO like counters

-and others

Like some have said I think it is language dependent in some cases – typically all compiled languages benefit from this, but not all interpreted languages. the instructions that happen to make the interpreter work will completely undo branch prediction in naive implementations, but its certainly possible to work it so that the only branches are the ones forced by the code to be interpreted, except in certain edge cases, and only then if you want to be especially robust. it wouldn’t surprise me if the more popular interpreted languages allow for this – particularly the lower level ones like JVM.

Great article Igor!

Results from Luajit – http://gist.github.com/413482

D:\p\badif>..\luajit\src\luajit badif.lua

(i & 0x80000000) == 0 time: 1.057

(i & 0xFFFFFFFF) == 0 time: 0.696

(i & 0x00000001) == 0 time: 3.682

(i & 0x00000003) == 0 time: 5.339

(i & 0x00000002) == 0 time: 3.686

(i & 0x00000004) == 0 time: 3.683

(i & 0x00000008) == 0 time: 3.686

(i & 0x00000010) == 0 time: 3.856

> for (int i = 0; i < max; i++) if () sum++;

Problem #1, when is false, sum++ is NOT executed, so what you may me measuring is the cost of doing/not doing sum++. This alone may explain why if (false) is so much faster than if (true).

Problem #2, regardless of whether the test holds true or false an expression like (i & 0x8000000) requires a 4 bytes litteral fetch from the instruction stream whereas an expression like (i & 2) or (i & 4) etc … might be encoded in a single byte or less of the instruction, depending on the architecture. Whereas the former needs an additional memory fetch (and potentially a very costly cache miss) the later can just proceed.

For such statements to be really significant, you would need to be more precise about the methodolgy you used, what the generated code looked like, how you did perform the measurement itself.

In other words it is way too easy to jump to conclusions …

verec:

– Regarding “Problem #1″: Clearly, the number of increments is not the dominant factor. All true: 322ms. All false: 276ms. A mixture of true and false: 1675ms.

I thought that this point was sufficiently obvious, and so I skipped the explanation for brevity.

– I looked at the generated x86 assembly instructions to make sure that different constants result in the same assembly instructions generated, sans the constant values.

– I looked at various processor counters to further understand the role of branch prediction behind the slowdown.

I didn’t include the x86 assembly in the article to keep the article easy to read. I’ll probably include assembly code in future articles, since some people seem to assume that I am measuring the wrong thing (measurements of hardware effects are very tricky, so perhaps it is not an unreasonable worry).

Just for fun submitted few more tests:

Common Lisp Version (LispWorks 6.01 32-bit Windows) – http://gist.github.com/414081

CL-USER 101 > (test-fun)

(i & 0x80000000) == 0 time: 0.687

(i & 0xFFFFFFFF) == 0 time: 0.671

(i & 0x00000001) == 0 time: 0.733

(i & 0x00000003) == 0 time: 0.795

(i & 0x00000002) == 0 time: 0.765

(i & 0x00000004) == 0 time: 0.764

(i & 0x00000008) == 0 time: 0.827

(i & 0x00000010) == 0 time: 0.983

MSVC & GCC – http://gist.github.com/414091

Microsoft (R) 32-bit C/C++ Optimizing Compiler Version 15.00.30729.01 for 80×86

C:\> cl -O2 badif.c

C:\> badif

(i & 0x80000000) == 0 time: 0.674000

(i & 0xFFFFFFFF) == 0 time: 0.677000

(i & 0x00000001) == 0 time: 0.677000

(i & 0x00000003) == 0 time: 0.674000

(i & 0x00000002) == 0 time: 0.691000

(i & 0x00000008) == 0 time: 1.051000

(i & 0x00000010) == 0 time: 1.232000

Target: i686-pc-cygwin

gcc version 4.3.4 20090804 (release) 1 (GCC)

c:\>sh -c “gcc -O9 badif.c -o badif-gcc.exe”

c:\>badif-gcc

(i & 0x80000000) == 0 time: 0.000000

(i & 0xFFFFFFFF) == 0 time: 0.702000

(i & 0x00000001) == 0 time: 1.060000

(i & 0x00000003) == 0 time: 1.046000

(i & 0x00000002) == 0 time: 1.060000

(i & 0x00000008) == 0 time: 1.061000

(i & 0x00000010) == 0 time: 1.030000

Could you recommend for me some books about this topic? I really want to read and know more about it. Thank you so much !

Zun, check out this book: http://www.amazon.com/Computer.....123704901/

From what people say, this is the best book on the detais of CPU and memory. I didn’t get to it myself yet, but that’s the book to look at if you want to read more on this topic.

Thank you so much. I will get one for me

You should be aware that A Quantitative Approach is hardcore (but very, very good). If you are new to this stuff, you might want to consider http://www.amazon.co.uk/Comput.....038;sr=1-1 from the same authors or perhaps http://www.amazon.co.uk/Inside.....038;sr=1-1.

[…] Fast and slow if-statements: branch prediction in modern processors […]

Worrying about branch prediction is most profitable in tight, heavily executed loops. Yet, one can “optimize” in some other cases by recalling that in the absence of branch prediction state (e.g. the first time a particular branch is evaluated) Pentiums predict backwards branches as taken (the common case of loops) but forwards branches as not taken. Hence, it may be beneficial to put the common case as the “true” part of a conditional branch, to increase prediction accuracy. For example:

if (rare boundary cases does not hold)

do common stuff

else

handle it

Instead of

if (rare boundary case)

handle it

else

do common stuff

W.r.t predication is useful in some cases but creates data dependencies that may stall the pipeline, as a predicated instruction waits for its condition’s evaluation to complete. A successfully predicted branch breaks such “dependency chains”. Predication works best when it replaces hard-to-predict branches.

Hello, very good article!

I did my own tests in C# .NET 4.0 Core i5 3.2 Ghz

const long count = 100000000;

var operands = new uint[]

{0x80000000,0xffffffff,1, 2, 3, 4, 8, 6,5,7,9,10,11,111};

foreach (var operand in operands)

{

var start = DateTime.Now;

for (int i = 0; i < count; i++)

{

if ((i & operand) == 0) ;

}

var milliseconds = (DateTime.Now -start).Milliseconds;

Console.WriteLine("{0}: \t{1} ms", operand, milliseconds);

}

All results was between 400~600ms. So I thing it would be JIT which optimize performance for difficult patterns. There are not so big variances for particular patterns.

Can you please given an example to show in which part it take branches and in which part not taken, and also show in example the static and dynamic prediction. Thanks

Everything looks pretty much the same on an i7.

(i & 0x80000000) == 0) => 3634 msec

(i & 0xffffffff) == 0) => 4119 msec

(i & 0x80000001) == 0) => 4149 msec

(i & 0x80000002) == 0) => 4228 msec

(i & 0x80000003) == 0) => 4197 msec

(i & 0x80000004) == 0) => 4180 msec

(i & 0x80000008) == 0) => 4415 msec

(i & 0x80000010) == 0) => 4056 msec

Altered the if-statements a bit for fair competition..

tyftyftyf: A more robust way to reproduce the branch prediction effect is to fill a bool array of say length 128 with either a predictable pattern (1010…) or a random pattern of bits. That example should be more hardware-agnostic.

perhaps, it will be useful to overcome IF-Catastrophe at some cases

http://alg0z.blogspot.ru/2013/02/if-or-no-if.html

[…] those extra branches the code will most likely run slower than the original implementation! Hardware branch prediction is really good at detecting branches following a certain pattern, but not so much with more or less random branches. Furthermore, branch prediction penalties on […]

Hello,

above content is fine, and I understood that

I would like to know about hybrid predictors, in that based on global history it will select either of the one predictor but how it is implemented they didn’t defined well, if you know about it please help me out…

thank you..

I tried to replicate results and on my Haswell all cases are equally fast. It looks like CPUs these days track history of indicidual branch instructions and can detect short repeating patterns.

Number of branch misses go up when condition is (i & 64) or (i & 128), but runtime is rougly the same (10% slowdown).

Kaja47, that is because your compiler likely translates the short branch to predication — it actually executes both branches in parallel and only writes the “correct” one to the register file. For not easily predictable patterns in loops with only a few instructions, your long term speed is greater often times with predication (because executing two instructions all the time is better than flushing a 10 stage pipeline 50% of the time, for instance).

*by parallel I mean ILP, not thread level parallelism

[…] Fast and slow if-statements: branch prediction in modern processors (čísla už neplatí, současná CPU detekují opakující […]

[…] nějakou dobou jsem narazil na článek z roku 2010, který testoval, jak si branch prediction procesorů té doby poradí s různými opakujícími […]

So the conclusion is, when writing code, we do not have to worry too much about prioritizing the branch with the most likelihood?

What happens if a mispredicted branch touches memory that is not cached? Will those instructions actually fetch the memory and execute on them, thereby incurring the cache miss penalty as well as the instruction flush?

compared performance of following two line of code in c++. and can’t see any difference. has things been changed?

for (size_t i = 0; i < loop; ++i) if ((i & 0x80000000) == 0) sum +=i

for (size_t i = 0; i < loop; ++i) if ((i & 2) == 0) sum +=i

actually, with debug on, the first line of code is slower. with -O3, they are pretty much the same.

must use a variable loop instead of a actual number like 10000000, as compiler is smart enough to know that the condition is always true, and if a number is provided, compiler will calculate the result during compile time.

I used gcc5.3 on SL6 Intel(R) Xeon(R) CPU E7- 4820 @ 2.00GHz