Most of my readers will understand that cache is a fast but small type of memory that stores recently accessed memory locations. This description is reasonably accurate, but the “boring” details of how processor caches work can help a lot when trying to understand program performance.

In this blog post, I will use code samples to illustrate various aspects of how caches work, and what is the impact on the performance of real-world programs.

The examples are in C#, but the language choice has little impact on the performance scores and the conclusions they lead to.

Example 1: Memory accesses and performance

How much faster do you expect Loop 2 to run, compared Loop 1?

int[] arr = new int[64 * 1024 * 1024]; // Loop 1 for (int i = 0; i < arr.Length; i++) arr[i] *= 3; // Loop 2 for (int i = 0; i < arr.Length; i += 16) arr[i] *= 3;

The first loop multiplies every value in the array by 3, and the second loop multiplies only every 16-th. The second loop only does about 6% of the work of the first loop, but on modern machines, the two for-loops take about the same time: 80 and 78 ms respectively on my machine.

The reason why the loops take the same amount of time has to do with memory. The running time of these loops is dominated by the memory accesses to the array, not by the integer multiplications. And, as I’ll explain on Example 2, the hardware will perform the same main memory accesses for the two loops.

Example 2: Impact of cache lines

Let’s explore this example deeper. We will try other step values, not just 1 and 16:

for (int i = 0; i < arr.Length; i += K) arr[i] *= 3;

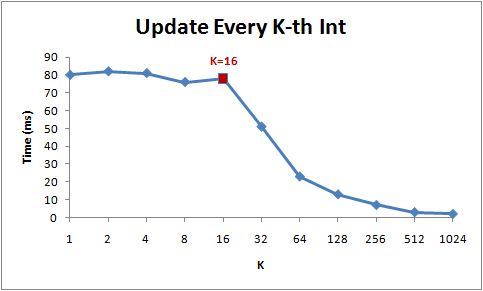

Here are the running times of this loop for different step values (K):

Notice that while step is in the range from 1 to 16, the running time of the for-loop hardly changes. But from 16 onwards, the running time is halved each time we double the step.

The reason behind this is that today’s CPUs do not access memory byte by byte. Instead, they fetch memory in chunks of (typically) 64 bytes, called cache lines. When you read a particular memory location, the entire cache line is fetched from the main memory into the cache. And, accessing other values from the same cache line is cheap!

Since 16 ints take up 64 bytes (one cache line), for-loops with a step between 1 and 16 have to touch the same number of cache lines: all of the cache lines in the array. But once the step is 32, we’ll only touch roughly every other cache line, and once it is 64, only every fourth.

Understanding of cache lines can be important for certain types of program optimizations. For example, alignment of data may determine whether an operation touches one or two cache lines. As we saw in the example above, this can easily mean that in the misaligned case, the operation will be twice slower.

Example 3: L1 and L2 cache sizes

Today’s computers come with two or three levels of caches, usually called L1, L2 and possibly L3. If you want to know the sizes of the different caches, you can use the CoreInfo SysInternals tool, or use the GetLogicalProcessorInfo Windows API call. Both methods will also tell you the cache line sizes, in addition to the cache sizes.

On my machine, CoreInfo reports that I have a 32kB L1 data cache, a 32kB L1 instruction cache, and a 4MB L2 data cache. The L1 caches are per-core, and the L2 caches are shared between pairs of cores:

Logical Processor to Cache Map: *--- Data Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64 *--- Instruction Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64 -*-- Data Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64 -*-- Instruction Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64 **-- Unified Cache 0, Level 2, 4 MB, Assoc 16, LineSize 64 --*- Data Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64 --*- Instruction Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64 ---* Data Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64 ---* Instruction Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64 --** Unified Cache 1, Level 2, 4 MB, Assoc 16, LineSize 64

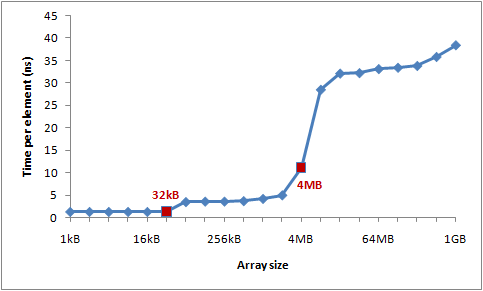

Let’s verify these numbers by an experiment. To do that, we’ll step over an array incrementing every 16th integer – a cheap way to modify every cache line. When we reach the last value, we loop back to the beginning. We’ll experiment with different array sizes, and we should see drops in the performance at the array sizes where the array spills out of one cache level.

Here is the program:

int steps = 64 * 1024 * 1024; // Arbitrary number of steps int lengthMod = arr.Length - 1; for (int i = 0; i < steps; i++) { arr[(i * 16) & lengthMod]++; // (x & lengthMod) is equal to (x % arr.Length) }

And here are the timings:

You can see distinct drops after 32kB and 4MB – the sizes of L1 and L2 caches on my machine.

Example 4: Instruction-level parallelism

Now, let’s take a look at something different. Out of these two loops, which one would you expect to be faster?

int steps = 256 * 1024 * 1024; int[] a = new int[2]; // Loop 1 for (int i=0; i<steps; i++) { a[0]++; a[0]++; } // Loop 2 for (int i=0; i<steps; i++) { a[0]++; a[1]++; }

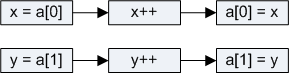

It turns out that the second loop is about twice faster than the first loop, at least on all of the machines I tested. Why? This has to do with the dependencies between operations in the two loop bodies.

In the body of the first loop, operations depend on each other as follows:

![]()

But in the second example, we only have these dependencies:

The modern processor has various parts that have a little bit of parallelism in them: it can access two memory locations in L1 at the same time, or perform two simple arithmetic operations. In the first loop, the processor cannot exploit this instruction-level parallelism, but in the second loop, it can.

[UPDATE]: Many people on reddit are asking about compiler optimizations, and whether { a[0]++; a[0]++; } would just get optimized to { a[0]+=2; }. In fact, the C# compiler and CLR JIT will not do this optimization – not when array accesses are involved. I built all of the tests in release mode (i.e. with optimizations), but I looked at the JIT-ted assembly to verify that optimizations aren’t skewing the results.

Example 5: Cache associativity

One key decision in cache design is whether each chunk of main memory can be stored in any cache slot, or in just some of them.

There are three possible approaches to mapping cache slots to memory chunks:

- Direct mapped cache

Each memory chunk can only be stored only in one particular slot in the cache. One simple solution is to map the chunk with index chunk_index to cache slot (chunk_index % cache_slots). Two memory chunks that map to the same slot cannot be stored simultaneously in the cache.

- N-way set associative cache

Each memory chunk can be stored in any one of N particular slots in the cache. As an example, in a 16-way cache, each memory chunk can be stored in 16 different cache slots. Commonly, chunks with indices with the same lowest order bits will all share 16 slots.

- Fully associative cache

Each memory chunk can be stored in any slot in the cache. Effectively, the cache operates like a hash table.

Direct mapped caches can suffer from conflicts – when multiple values compete for the same slot in the cache, they keep evicting each other out, and the hit rate plummets. On the other hand, fully associative caches are complicated and costly to implement in the hardware. N-way set associative caches are the typical solution for processor caches, as they make a good trade off between implementation simplicity and good hit rate.

For example, the 4MB L2 cache on my machine is 16-way associative. All 64-byte memory chunks are partitioned into sets (based on the lowest order bits of the chunk index), and chunks in the same set compete for 16 slots in the L2 cache.

Since the L2 cache has 65,536 slots, and each set will need 16 slots in the cache, we will have 4,096 sets. So, the lowest 12 bits of the chunk index will determine which set the chunk belongs to (212 = 4,096). As a result, cache lines at addresses that differ by a multiple of 262,144 bytes (4096 * 64) will compete for the same slot in the cache. The cache on my machine can hold at most 16 such cache lines.

In order for the effects of cache associativity to become apparent, I need to repeatedly access more than 16 elements from the same set. I will demonstrate this using the following method:

public static long UpdateEveryKthByte(byte[] arr, int K) { Stopwatch sw = Stopwatch.StartNew(); const int rep = 1024*1024; // Number of iterations – arbitrary int p = 0; for (int i = 0; i < rep; i++) { arr[p]++; p += K; if (p >= arr.Length) p = 0; } sw.Stop(); return sw.ElapsedMilliseconds; }

This method increments every K-th value in the array. Once the it reaches the end of the array, it starts again from the beginning. After running sufficiently long (2^20 steps), the loop stops.

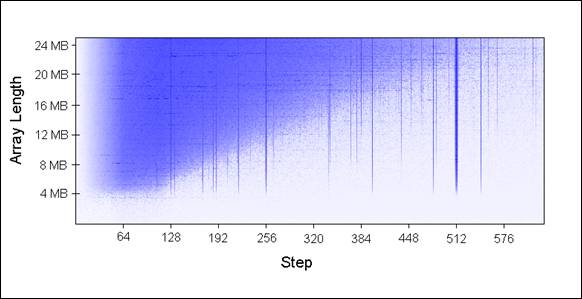

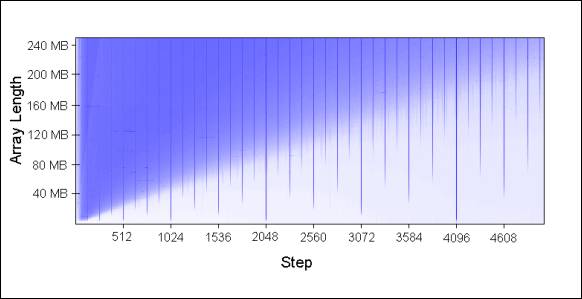

I ran UpdateEveryKthByte() with different array sizes (in 1MB increments) and different step sizes. Here is a plot of the results, with blue representing long running time, and white representing short:

The blue areas (long running times) are cases where the updated values could not be simultaneously held in the cache as we repeatedly iterated over them. The bright blue areas correspond to running times of ~80 ms, and the nearly white areas to ~10 ms.

Let’s explain the blue parts of the chart:

- Why the vertical lines?The vertical lines show the step values that touch too many memory locations (>16) from the same set. For those steps, we cannot simultaneously hold all touched values in the 16-way associative cache on my machine.

Some bad step values are powers of two: 256 and 512. As an example, consider step 512 on an 8MB array. An 8MB cache line contains 32 values that are spaced by 262,144 bytes apart. All of those values will be updated by each pass of our loop, because 512 divides 262,144.

And since 32 > 16, those 32 values will keep competing for the same 16 slots in the cache.

Some values that are not powers of two are simply unfortunate, and will end up visiting disproportionately many values from the same set. Those step values will also show up as as blue lines.

- Why do the vertical lines stop at 4MB array length?On arrays of 4MB or less, a 16-way associative cache is just as good as a fully associative one.

A 16-way associative cache can hold at most 16 cache lines that are a multiple of 262,144 bytes apart. There is no set of 17 or more cache lines all aligned on 262,144-byte boundaries within 4MB, because 16 * 262,144 = 4,194,304.

- Why the blue triangle in upper left?In the triangle area, we cannot hold all necessary data in cache simultaneously … not due to the associativity, but simply because of the L2 cache size limit.

For example, consider the array length 16MB with step 128. We are repeatedly updating every 128th byte in the array, which means that we touch every other 64-byte memory chunk. To store every other cache line of a 16MB array, we’d need 8MB cache. But, my machine only has 4MB of cache.

Even if the 4MB cache on my machine was fully associative, it still wouldn’t be able to hold 8MB of data.

- Why does the triangle fade out in the left?Notice that the gradient goes from 0 to 64 bytes – one cache line! As explained in examples 1 and 2, additional accesses to same cache line are nearly free. For example, when stepping by 16 bytes, it will take 4 steps to get to the next cache line. So, we get four memory accesses for the price of one.

Since the number of steps is the same for all cases, a cheaper step results in a shorter running time.

These patterns continue to hold as you extend the chart:

Cache associativity is interesting to understand and can certainly be demonstrated, but it tends to be less of a problem compared to the other issues discussed in this article. It is certainly not something that should be at the forefront of your mind as you write programs.

Example 6: False cache line sharing

On multi-core machines, caches encounter another problem – consistency. Different cores have fully or partly separate caches. On my machine, L1 caches are separate (as is common), and there are two pairs of processors, each pair sharing an L2 cache. While the details vary, a modern multi-core machine will have a multi-level cache hierarchy, where the faster and smaller caches belong to individual processors.

When one processor modifies a value in its cache, other processors cannot use the old value anymore. That memory location will be invalidated in all of the caches. Furthermore, since caches operate on the granularity of cache lines and not individual bytes, the entire cache line will be invalidated in all caches!

To demonstrate this issue, consider this example:

private static int[] s_counter = new int[1024]; private void UpdateCounter(int position) { for (int j = 0; j < 100000000; j++) { s_counter[position] = s_counter[position] + 3; } }

On my quad-core machine, if I call UpdateCounter with parameters 0,1,2,3 from four different threads, it will take 4.3 seconds until all threads are done.

On the other hand, if I call UpdateCounter with parameters 16,32,48,64 the operation will be done in 0.28 seconds!

Why? In the first case, all four values are very likely to end up on the same cache line. Each time a core increments the counter, it invalidates the cache line that holds all four counters. All other cores will suffer a cache miss the next time they access their own counters. This kind of thread behavior effectively disables caches, crippling the program’s performance.

Example 7: Hardware complexities

Even when you know the basics of how caches work, the hardware will still sometimes surprise you. Different processors differ in optimizations, heuristics, and subtle details of how they do things.

On some processors, L1 cache can process two accesses in parallel if they access cache lines from different banks, and serially if they belong to the same bank. Also, processors can surprise you with clever optimizations. For example, the false-sharing example that I’ve used on several machines in the past did not work well on my machine without tweaks – my home machine can optimize the execution in the simplest cases to reduce the cache invalidations.

Here is one odd example of “hardware weirdness”:

private static int A, B, C, D, E, F, G; private static void Weirdness() { for (int i = 0; i < 200000000; i++) { <something> } }

When I substitute three different blocks for “<something>”, I get these timings:

| <something> | Time |

| A++; B++; C++; D++; | 719 ms |

| A++; C++; E++; G++; | 448 ms |

| A++; C++; | 518 ms |

Incrementing fields A,B,C,D takes longer than incrementing fields A,C,E,G. And what’s even weirder, incrementing just A and C takes longer than increment A and C and E and G!

I don’t know for sure what is the reason behind these numbers, but I suspect it is related to memory banks. If someone can explain these numbers, I’d be very curious to hear about it.

The lesson of this example is that can be difficult to fully predict hardware performance. There is a lot that you can predict, but ultimately, it is very important to measure and verify your assumptions.

Read more of my articles:

Human heart is a Turing machine, research on XBox 360 shows. Wait, what?

Self-printing Game of Life in C#

Efficient auto-complete with a ternary search tree

Numbers that cannot be computed

And if you like my blog, subscribe!

Conclusion

Hopefully all of this helps you understand how caches work, and apply that knowledge when tuning your programs.

In Example 4 above, for the first part, it appears the compiler optimizes away the loop entirely, replacing it with the equivalent of “a[0] += 2*steps;”.

Having a[4] as the array and comparing body

{ a[0]++; a[0]++; a[2]++; a[2]++ } with

{ a[0]++; a[1]++; a[2]++; a[3]++ } appears to illustrate the issue better.

Does your home computer have a 64-bit processor (and are you using it in 64-bit mode)?

If so, in Example 7, I suspect that the (probably 32-bit) ints will be stored in single 64-bit memory locations, where AB, CD, EF, and G- comprise four different memory locations. I reckon, therefore, that

A++; C++; E++; G++;

will be parallelised more than

A++; B++; C++; D++;

thanks to instruction level parallelism (Example 4).

As for

A++; C++; E++; G++;

vs

A++; C++;

I have no idea, other than suggesting that they take the same amount of time (because of instruction level parallelism) and the discrepancy you are seeing is some unrelated processing overhead.

Thanks for this interesting investigation into caches and processor optimisations!

Oh, it’s just AWESOME.

I had made a lecture on this post this saturday.

I’m lecturing computer graphics, but students have to know it.

I had never seen better explanation of this topic, even in Richter`s books.

Sometimes cache effects kill all output of big Oh analysis.

Very interesting, but is it a big diff between Intel and AMD?

On my X2 machine, I tried example #1:

The += 16 takes 1/3 as long as the += 1. (+= 8 takes about the same as += 16) += 32 takes even shorter time.

2 gulgi:

On my centrino laptop this:

int[] arr = new int[64 * 1024 * 1024];

for (int x = 1; x < 65; x <<=1)

{

Stopwatch sw = new Stopwatch();

sw.Start();

for (int i = 0; i < arr.Length; i+=x) arr[i] *= 3;

sw.Stop();

Console.WriteLine(sw.ElapsedMilliseconds);

}

produces output:

908

417

390

389

390

217

106

everything is not so clear, by close enough to described behavior

@gulgi:

I’m not that knowledgeable about this stuff, but it is possible that your computer has 32-byte cache lines (instead of Igor’s 64 bytes.)

On a very recent Intel(R) Xeon(R) W3520 @ 2.67GHz, I got similar results. The graph at end of example 3 I have created for my system perfectly shows the L1, L2, and L3 cache level, at respectively 1.1, 1.5, 2.1, and 5.5 nanoseconds per access.

But this is when reading only 1 integer value (32 bits) in a single cache line comprising 16 of them. Whenever I try to do something with the other 15 integers (which is a better approach of the real use of memory), anything as simple as summing them up makes the CPU time becoming larger than memory latency time.

Maybe memory latency and throughput have enhanced a lot recently, but in the end, it looks to me that there’s no real need to care about this.

@Didier Trosset:

“Real need” – it’s a thing beyond CS, it’s philosophy.

There is no real need to understand how computer works to write programs. But this knowledge dramaticaly increases probability of creating good programs.

Most of things we discover will be useless at most of time we are working on our projects. But sometimes something of this “useless things” are very usefull.

Euler’s totient function was nearly useless to engineers for about 200 years, but in 1977 it became very important to RSA.

Cache effects do not matters in ERP, CMS and many other systems. But, for exampe, in shaders you cannot avoid them. It’s better to take them into account if you create DBMS or graphics rendering engine.

@Andrew Borodin

BTW, I can explain the figures you got from your program in June 6th comment.

908, 417, 390, 389, 390, 217, 106

The value 390 is limited by the memory accesses. As such, when you traverse your data 4 by 4, 8 by 8, or 16 by 16 (32 bits integer values) the limitation is memory.

When you traverse by 32 (217), or 64 (106), then you only use 1 cache line (64 bytes) out of 2, or out of 4. This is showed by the last 2 values, approximately divided by 2, and by 4 from the 390 limit.

For the first two ones, the limitation is not memory, but processing. It simply shows that computing `arr[i] *= 3` 16 times takes longer than accessing a 64 bytes memory line (908). Computing it 8 times divides the time by 2 (417), and is almost equal to the memory access limit.

I fully agree with your last comment.

@Didier Trosset

Still I can’t understand first two values. Why processing of all items is 16 times slower then processing only odd items?

processing of all is 908-390 = 520

processing of odd is 417 – 390 = 30

M.b. I should statistically clean results..

even this

http://msdn.microsoft.com/en-u.....73852.aspx

does not explains first two values

@Andrew Borodin

I am not certain that you can calculate as you do the time of processing. I am pretty certain that the processors include some memory read-ahead mechanism. This mechanism is at work with the current code. And my reasoning is that the next few cache lines are fetched in advance when you simply read forward the memory. Hence, my thinking that 417 is the half of 908 (for very large values of 417 ) because limitation is only computation time here.

) because limitation is only computation time here.

I remember Herb Sutter presenting test of algorithms that were using memory, both sequentially, and randomly, and that the results were _very_ different, and are explained by this read-ahead mechanism.

First-class story it is actually. My girlfriend has been seeking for this info.

I wonder if this is applicable in C#?

Compare slides 16 and 30:

http://www.slideshare.net/DICE.....ted-design

For case #7, did you consider the fact that the compiler may use SSE instructions when you operate on 4 different integers ? That would explain why incrementing 4 numbers (which would be 1 SSE instruction) can be faster that just 2 (which would be 2 regular instructions).

Maybe you could look at the assembly generated, and tell us what it says.

My processor doesn’t show that weird behavior.

Well I asked an intel engineer in the end and got this reponse:

“It’s clearly something to do with which instructions end up in which execution units, how quickly the machine spots a store-hit-load problem, and how quickly and elegantly it deals with unrolling the speculative execution to cope with it (or if it takes multiple cycles because of some internal conflict). But that said – you’d need a very detailed pipetrace and simulator to figure this out. Predicting out-of-order instruction processing in these pipelines is way too hard to do on paper, even for the people who designed the machines. For laymen – no hope in hell. Sorry!”

@Goz: Yeah, modern processor pipelines are complicated. Thanks for sharing the response!

Thanks for sharing the response!

In general, I would do these experiments using in-lined assembly and loops which are unrolled to some degree. It would be interesting to see the assembly for the A..G case. Also the addresses of A through G are of interest. Are A through G on the same cache line? Do the addresses change when the “code” changes and you recompile? Possibly write buffer effects???

This reminds me of a of execute a sequence of instructions on a Sparc machine and then executing the exact same set plus a few more instructions and doing this in a loop. The longer sequence was always slightly faster. It was nothing more than branch prediction helped by the inner loop going one extra time.

A technique that I often use is local variable caching. If a loop, and especially an inner loop, uses lots of variables local to the class, static, defined in superclasses, etc., then those variables and the ones defined in the function itself, are probably all in different cache lines. When you make all used values (pointers in case of arrays/instances) local to the function, they’ll all be in the same cache line for sure. It even measurably speeds up algorithms that iterate over arrays of over a hundred MB (tested with Java).

Hi I tested example 1 for stride of 1, 2, 4, 8,…1024 with 1048576 int(4B) array elements,1048576 it was the maximum number of array that I could define in c++, fedora 17 x64 with 3G of Ram 2M L2 cache 32K L1 .I did timing with papi tool in nanosecond but as the step increased up time reduced also the number of cycle reduce too.

I think Cycle reduction is because of stride,as the stride become wider less load, multiplication,store etc happens(less element will be touched for multiplication).But About time I have no Idea. Unless that 1048576(4MB) is not so big kernel.Dose anybody have any Idea?.

Great post. Thanks!

Based on your article I have written a very simple program given below.The result that I find is as follows.

./cache

cpu time for loop 1 0.460000 secs.

cpu time for loop 2 (j = 8) 0.050000 secs.

cpu time for loop 2 (j = 9) 0.050000 secs.

cpu time for loop 2 (j = 10) 0.050000 secs.

cpu time for loop 2 (j = 11) 0.050000 secs.

cpu time for loop 2 (j = 12) 0.040000 secs.

cpu time for loop 2 (j = 13) 0.050000 secs.

cpu time for loop 2 (j = 14) 0.050000 secs.

cpu time for loop 2 (j = 15) 0.040000 secs.

cpu time for loop 2 (j = 16) 0.050000 secs.

cpu time for loop 2 (j = 17) 0.040000 secs.

cpu time for loop 2 (j = 18) 0.050000 secs.

cpu time for loop 2 (j = 19) 0.040000 secs.

cpu time for loop 2 (j = 20) 0.040000 secs.

cpu time for loop 2 (j = 21) 0.040000 secs.

cpu time for loop 2 (j = 22) 0.040000 secs.

cpu time for loop 2 (j = 23) 0.040000 secs.

cpu time for loop 2 (j = 24) 0.030000 secs.

cpu time for loop 2 (j = 25) 0.040000 secs.

cpu time for loop 2 (j = 26) 0.030000 secs.

cpu time for loop 2 (j = 27) 0.040000 secs.

cpu time for loop 2 (j = 28) 0.030000 secs.

cpu time for loop 2 (j = 29) 0.040000 secs.

cpu time for loop 2 (j = 30) 0.030000 secs.

The first loop multiplies every value in the array by 3, and the second loop multiplies > only every 16-th. The second loop only does about 6% of the work of the first loop, but on modern machines, the two for-loops take about the same time: 80 and 78 ms respectively on my machine.

This doesn’t seem to be the case on my machine.As you can see the time for the execution of

loop 1 is 0.46 seconds.

and that for

loop 2 is 0.03 seconds or 0.04 seconds or 0.05 seconds

for different values of j.

Can someone explain why this is happening? I would greatly appreciate it.

#include

#include

#include

#include

#include

#define MAX_SIZE (64*1024*1024)

int main()

{

clock_t start, end;

double cpu_time;

int i = 0;

int j = 0;

/*MAX_SIZE array is too big for stack.This is an unfortunate rough edge of the way the stack works.

It lives in a fixed-size buffer, set by the program executable’s configuration according to the

operating system, but its actual size is seldom checked against the available space.*/

/*int arr[MAX_SIZE];*/

int *arr = (int*)malloc(MAX_SIZE * sizeof(int));

/*cpu clock ticks count start*/

start = clock();

/*Loop 1*/

for (i = 0; i < MAX_SIZE; i++) arr[i] *= 3;

/*cpu clock ticks count stop*/

end = clock();

cpu_time = ((double) (end – start)) / CLOCKS_PER_SEC;

printf("cpu time for loop 1 %.6f secs.\n",cpu_time);

for (j = 8 ; j < 32 ; j++)

{

/*cpu clock ticks count start*/

start = clock();

/* Loop 2*/

for (i = 0; i < MAX_SIZE; i += j) arr[i] *= 3;

/*cpu clock ticks count stop*/

end = clock();

cpu_time = ((double) (end – start)) / CLOCKS_PER_SEC;

printf("cpu time for loop 2 (j = %d) %.6f secs.\n",j,cpu_time);

}

return 0;

}

from a quick look it seems that it’s programming error. The second loop is embedded in another loop and in each of the outer loop you reset the clock. I did not debug it, just from review. Check it…

Sorry, I was confused due to the lack of alignment… It is not a programming problem

I also did a test with a small piece of C++ code:

#include <time.h>

#include <stdio.h>

#define N 64*1024*1024

int main() {

int* arr;

arr = new int[N];

for (int i = 0; i < N; i++) {

// Initialize

arr[i] = 7986;

}

for (int dn=1; dn < 32; dn ++) {

clock_t t;

t = clock();

for (int i = 0; i < N; i += dn) {

arr[i] *= 3;

}

t = clock() - t;

printf("dn = %3d, %10.4f seconds\n", dn, (float)t/CLOCKS_PER_SEC);

}

return 0;

}

I ran the above code with a MacBook Pro.

The result seems to be at odds with Example 1 and 2. The run time for each increment value (namely dn, from 1 to 31) seems to be very close to inversely proportional to dn:

[…] Gallery of Processor Cache Effects […]

Hi was curious if anyone tried the example 3. I’m not sure how that example works, it looks very brief.

Thanks

Tested this out on mac and saw an inverse relation of step size and running time even with step size <16(I have verified that the cache line size=64)

I checked the cache hit rates using valgrind:

step size =1

==11871== D refs: 403,110,653 (335,886,752 rd + 67,223,901 wr)

==11871== D1 misses: 4,205,730 ( 4,204,059 rd + 1,671 wr)

==11871== LLd misses: 4,201,776 ( 4,200,523 rd + 1,253 wr)

==11871== D1 miss rate: 1.0% ( 1.2% + 0.0% )

==11871== LLd miss rate: 1.0% ( 1.2% + 0.0% )

==11871==

==11871== LL refs: 4,207,039 ( 4,205,368 rd + 1,671 wr)

==11871== LL misses: 4,203,070 ( 4,201,817 rd + 1,253 wr)

==11871== LL miss rate: 0.3% ( 0.3% + 0.0% )

step size =15

–11940– warning: L3 cache found, using its data for the LL simulation.

==11940== D refs: 27,301,019 (22,712,057 rd + 4,588,962 wr)

==11940== D1 misses: 4,205,730 ( 4,204,059 rd + 1,671 wr)

==11940== LLd misses: 4,201,776 ( 4,200,523 rd + 1,253 wr)

==11940== D1 miss rate: 15.4% ( 18.5% + 0.0% )

==11940== LLd miss rate: 15.3% ( 18.4% + 0.0% )

==11940==

==11940== LL refs: 4,207,039 ( 4,205,368 rd + 1,671 wr)

==11940== LL misses: 4,203,070 ( 4,201,817 rd + 1,253 wr)

==11940== LL miss rate: 4.6% ( 4.8% + 0.0% )

The LL misses,LLd misses values are the same(confirming the precondition in example 1 and 2) , however the running times drastically differ.

The only inference I can draw is that memory access isn't dominating run time on my machine as we expect it to be.

I’ve tried to reproduce results of pt. 3 and couldn’t, here’s my program, which shows different pattern: https://gist.github.com/anonymous/e326bc9b764e3f04c494a213f704a669

Plus, reasoning confuses me, as cache line size is only 64 bytes, you should be getting more and more misses with every step increase: for step of 1 — no misses per 16 loop iterations (4 bytes per step * 16 = 64), for step of 2 — 1 miss per 16 iterations (8 * 16 = 128), for 4 — 3, for 8 — _7_ (32 * 16 = 64 * 8), etc.

Same problem on Mac in C++ here, cannot reproduce at least 1 and 2, and we were 2 looking at the program to spot bugs. Take results of this post with caution.

Regarding Part 3: The code mentioned can’t defeat a prefetcher as the stride is pretty predictable. You would need a pseudo-random circular pattern and a pointer chasing approach to achieve that.

Thanks for this. I’ve done similar experiments in the past and this is one rationale behind why hardware acceleration can be so effective on problems people assume a CPU should handle well.

Thanks for the great info. I have few questions. How did you calculate those cache misses? How did you setup?

I’m shocked you dared using C# for such a test. The world has lost its mind.

Very useful and very interesting! Thanks for the great post!

hi, i test example1 on my computer and got the below, why the results are not the same as u mentioned

test01 case1, time cost=580

test01 case2, time cost=70

BTW worth noting that those loops do not actually do integer multiplication, the compiler should optimize *=3 into a couple of adds (or a single lea if on x86) plus the loads/stores.

In ex 5 your terminology is a bit inconsistent. You seem to use the word ‘slot’ and ‘line’ interchangeable and you referred to a “8MB cache line” at one point.

Hi was curious if anyone tried the example 3. I’m not sure how that example works, it looks very brief.

Thanks

Hello, is it possible to run these tests on a language like python and get similar results?