Most of my readers will understand that cache is a fast but small type of memory that stores recently accessed memory locations. This description is reasonably accurate, but the “boring” details of how processor caches work can help a lot when trying to understand program performance.

In this blog post, I will use code samples to illustrate various aspects of how caches work, and what is the impact on the performance of real-world programs.

The examples are in C#, but the language choice has little impact on the performance scores and the conclusions they lead to.

Example 1: Memory accesses and performance

How much faster do you expect Loop 2 to run, compared Loop 1?

int[] arr = new int[64 * 1024 * 1024]; // Loop 1 for (int i = 0; i < arr.Length; i++) arr[i] *= 3; // Loop 2 for (int i = 0; i < arr.Length; i += 16) arr[i] *= 3;

The first loop multiplies every value in the array by 3, and the second loop multiplies only every 16-th. The second loop only does about 6% of the work of the first loop, but on modern machines, the two for-loops take about the same time: 80 and 78 ms respectively on my machine.

The reason why the loops take the same amount of time has to do with memory. The running time of these loops is dominated by the memory accesses to the array, not by the integer multiplications. And, as I’ll explain on Example 2, the hardware will perform the same main memory accesses for the two loops.

Example 2: Impact of cache lines

Let’s explore this example deeper. We will try other step values, not just 1 and 16:

for (int i = 0; i < arr.Length; i += K) arr[i] *= 3;

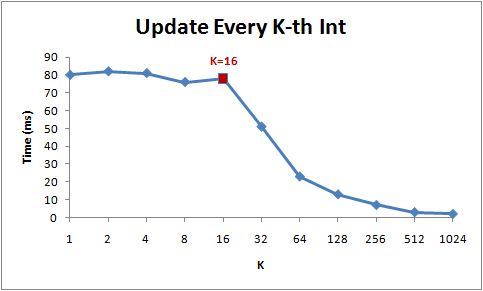

Here are the running times of this loop for different step values (K):

Notice that while step is in the range from 1 to 16, the running time of the for-loop hardly changes. But from 16 onwards, the running time is halved each time we double the step.

The reason behind this is that today’s CPUs do not access memory byte by byte. Instead, they fetch memory in chunks of (typically) 64 bytes, called cache lines. When you read a particular memory location, the entire cache line is fetched from the main memory into the cache. And, accessing other values from the same cache line is cheap!

Since 16 ints take up 64 bytes (one cache line), for-loops with a step between 1 and 16 have to touch the same number of cache lines: all of the cache lines in the array. But once the step is 32, we’ll only touch roughly every other cache line, and once it is 64, only every fourth.

Understanding of cache lines can be important for certain types of program optimizations. For example, alignment of data may determine whether an operation touches one or two cache lines. As we saw in the example above, this can easily mean that in the misaligned case, the operation will be twice slower.

Example 3: L1 and L2 cache sizes

Today’s computers come with two or three levels of caches, usually called L1, L2 and possibly L3. If you want to know the sizes of the different caches, you can use the CoreInfo SysInternals tool, or use the GetLogicalProcessorInfo Windows API call. Both methods will also tell you the cache line sizes, in addition to the cache sizes.

On my machine, CoreInfo reports that I have a 32kB L1 data cache, a 32kB L1 instruction cache, and a 4MB L2 data cache. The L1 caches are per-core, and the L2 caches are shared between pairs of cores:

Logical Processor to Cache Map: *--- Data Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64 *--- Instruction Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64 -*-- Data Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64 -*-- Instruction Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64 **-- Unified Cache 0, Level 2, 4 MB, Assoc 16, LineSize 64 --*- Data Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64 --*- Instruction Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64 ---* Data Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64 ---* Instruction Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64 --** Unified Cache 1, Level 2, 4 MB, Assoc 16, LineSize 64

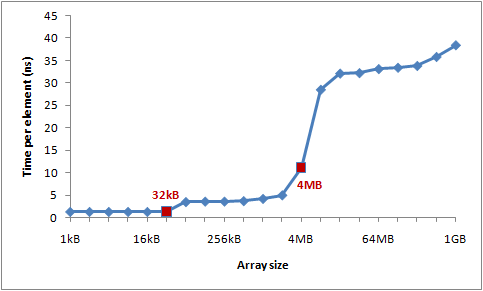

Let’s verify these numbers by an experiment. To do that, we’ll step over an array incrementing every 16th integer – a cheap way to modify every cache line. When we reach the last value, we loop back to the beginning. We’ll experiment with different array sizes, and we should see drops in the performance at the array sizes where the array spills out of one cache level.

Here is the program:

int steps = 64 * 1024 * 1024; // Arbitrary number of steps int lengthMod = arr.Length - 1; for (int i = 0; i < steps; i++) { arr[(i * 16) & lengthMod]++; // (x & lengthMod) is equal to (x % arr.Length) }

And here are the timings:

You can see distinct drops after 32kB and 4MB – the sizes of L1 and L2 caches on my machine.

Example 4: Instruction-level parallelism

Now, let’s take a look at something different. Out of these two loops, which one would you expect to be faster?

int steps = 256 * 1024 * 1024; int[] a = new int[2]; // Loop 1 for (int i=0; i<steps; i++) { a[0]++; a[0]++; } // Loop 2 for (int i=0; i<steps; i++) { a[0]++; a[1]++; }

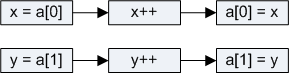

It turns out that the second loop is about twice faster than the first loop, at least on all of the machines I tested. Why? This has to do with the dependencies between operations in the two loop bodies.

In the body of the first loop, operations depend on each other as follows:

![]()

But in the second example, we only have these dependencies:

The modern processor has various parts that have a little bit of parallelism in them: it can access two memory locations in L1 at the same time, or perform two simple arithmetic operations. In the first loop, the processor cannot exploit this instruction-level parallelism, but in the second loop, it can.

[UPDATE]: Many people on reddit are asking about compiler optimizations, and whether { a[0]++; a[0]++; } would just get optimized to { a[0]+=2; }. In fact, the C# compiler and CLR JIT will not do this optimization – not when array accesses are involved. I built all of the tests in release mode (i.e. with optimizations), but I looked at the JIT-ted assembly to verify that optimizations aren’t skewing the results.

Example 5: Cache associativity

One key decision in cache design is whether each chunk of main memory can be stored in any cache slot, or in just some of them.

There are three possible approaches to mapping cache slots to memory chunks:

- Direct mapped cache

Each memory chunk can only be stored only in one particular slot in the cache. One simple solution is to map the chunk with index chunk_index to cache slot (chunk_index % cache_slots). Two memory chunks that map to the same slot cannot be stored simultaneously in the cache.

- N-way set associative cache

Each memory chunk can be stored in any one of N particular slots in the cache. As an example, in a 16-way cache, each memory chunk can be stored in 16 different cache slots. Commonly, chunks with indices with the same lowest order bits will all share 16 slots.

- Fully associative cache

Each memory chunk can be stored in any slot in the cache. Effectively, the cache operates like a hash table.

Direct mapped caches can suffer from conflicts – when multiple values compete for the same slot in the cache, they keep evicting each other out, and the hit rate plummets. On the other hand, fully associative caches are complicated and costly to implement in the hardware. N-way set associative caches are the typical solution for processor caches, as they make a good trade off between implementation simplicity and good hit rate.

For example, the 4MB L2 cache on my machine is 16-way associative. All 64-byte memory chunks are partitioned into sets (based on the lowest order bits of the chunk index), and chunks in the same set compete for 16 slots in the L2 cache.

Since the L2 cache has 65,536 slots, and each set will need 16 slots in the cache, we will have 4,096 sets. So, the lowest 12 bits of the chunk index will determine which set the chunk belongs to (212 = 4,096). As a result, cache lines at addresses that differ by a multiple of 262,144 bytes (4096 * 64) will compete for the same slot in the cache. The cache on my machine can hold at most 16 such cache lines.

In order for the effects of cache associativity to become apparent, I need to repeatedly access more than 16 elements from the same set. I will demonstrate this using the following method:

public static long UpdateEveryKthByte(byte[] arr, int K) { Stopwatch sw = Stopwatch.StartNew(); const int rep = 1024*1024; // Number of iterations – arbitrary int p = 0; for (int i = 0; i < rep; i++) { arr[p]++; p += K; if (p >= arr.Length) p = 0; } sw.Stop(); return sw.ElapsedMilliseconds; }

This method increments every K-th value in the array. Once the it reaches the end of the array, it starts again from the beginning. After running sufficiently long (2^20 steps), the loop stops.

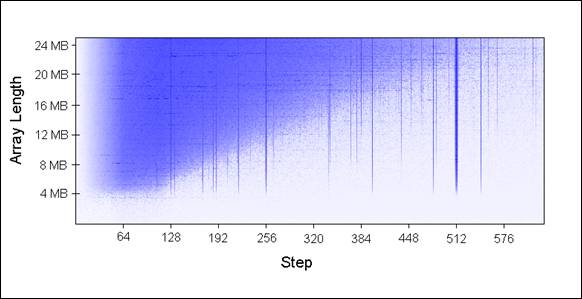

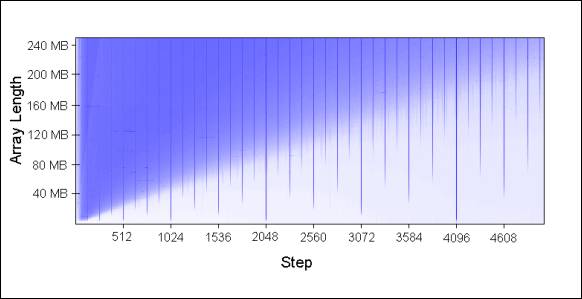

I ran UpdateEveryKthByte() with different array sizes (in 1MB increments) and different step sizes. Here is a plot of the results, with blue representing long running time, and white representing short:

The blue areas (long running times) are cases where the updated values could not be simultaneously held in the cache as we repeatedly iterated over them. The bright blue areas correspond to running times of ~80 ms, and the nearly white areas to ~10 ms.

Let’s explain the blue parts of the chart:

- Why the vertical lines?The vertical lines show the step values that touch too many memory locations (>16) from the same set. For those steps, we cannot simultaneously hold all touched values in the 16-way associative cache on my machine.

Some bad step values are powers of two: 256 and 512. As an example, consider step 512 on an 8MB array. An 8MB cache line contains 32 values that are spaced by 262,144 bytes apart. All of those values will be updated by each pass of our loop, because 512 divides 262,144.

And since 32 > 16, those 32 values will keep competing for the same 16 slots in the cache.

Some values that are not powers of two are simply unfortunate, and will end up visiting disproportionately many values from the same set. Those step values will also show up as as blue lines.

- Why do the vertical lines stop at 4MB array length?On arrays of 4MB or less, a 16-way associative cache is just as good as a fully associative one.

A 16-way associative cache can hold at most 16 cache lines that are a multiple of 262,144 bytes apart. There is no set of 17 or more cache lines all aligned on 262,144-byte boundaries within 4MB, because 16 * 262,144 = 4,194,304.

- Why the blue triangle in upper left?In the triangle area, we cannot hold all necessary data in cache simultaneously … not due to the associativity, but simply because of the L2 cache size limit.

For example, consider the array length 16MB with step 128. We are repeatedly updating every 128th byte in the array, which means that we touch every other 64-byte memory chunk. To store every other cache line of a 16MB array, we’d need 8MB cache. But, my machine only has 4MB of cache.

Even if the 4MB cache on my machine was fully associative, it still wouldn’t be able to hold 8MB of data.

- Why does the triangle fade out in the left?Notice that the gradient goes from 0 to 64 bytes – one cache line! As explained in examples 1 and 2, additional accesses to same cache line are nearly free. For example, when stepping by 16 bytes, it will take 4 steps to get to the next cache line. So, we get four memory accesses for the price of one.

Since the number of steps is the same for all cases, a cheaper step results in a shorter running time.

These patterns continue to hold as you extend the chart:

Cache associativity is interesting to understand and can certainly be demonstrated, but it tends to be less of a problem compared to the other issues discussed in this article. It is certainly not something that should be at the forefront of your mind as you write programs.

Example 6: False cache line sharing

On multi-core machines, caches encounter another problem – consistency. Different cores have fully or partly separate caches. On my machine, L1 caches are separate (as is common), and there are two pairs of processors, each pair sharing an L2 cache. While the details vary, a modern multi-core machine will have a multi-level cache hierarchy, where the faster and smaller caches belong to individual processors.

When one processor modifies a value in its cache, other processors cannot use the old value anymore. That memory location will be invalidated in all of the caches. Furthermore, since caches operate on the granularity of cache lines and not individual bytes, the entire cache line will be invalidated in all caches!

To demonstrate this issue, consider this example:

private static int[] s_counter = new int[1024]; private void UpdateCounter(int position) { for (int j = 0; j < 100000000; j++) { s_counter[position] = s_counter[position] + 3; } }

On my quad-core machine, if I call UpdateCounter with parameters 0,1,2,3 from four different threads, it will take 4.3 seconds until all threads are done.

On the other hand, if I call UpdateCounter with parameters 16,32,48,64 the operation will be done in 0.28 seconds!

Why? In the first case, all four values are very likely to end up on the same cache line. Each time a core increments the counter, it invalidates the cache line that holds all four counters. All other cores will suffer a cache miss the next time they access their own counters. This kind of thread behavior effectively disables caches, crippling the program’s performance.

Example 7: Hardware complexities

Even when you know the basics of how caches work, the hardware will still sometimes surprise you. Different processors differ in optimizations, heuristics, and subtle details of how they do things.

On some processors, L1 cache can process two accesses in parallel if they access cache lines from different banks, and serially if they belong to the same bank. Also, processors can surprise you with clever optimizations. For example, the false-sharing example that I’ve used on several machines in the past did not work well on my machine without tweaks – my home machine can optimize the execution in the simplest cases to reduce the cache invalidations.

Here is one odd example of “hardware weirdness”:

private static int A, B, C, D, E, F, G; private static void Weirdness() { for (int i = 0; i < 200000000; i++) { <something> } }

When I substitute three different blocks for “<something>”, I get these timings:

| <something> | Time |

| A++; B++; C++; D++; | 719 ms |

| A++; C++; E++; G++; | 448 ms |

| A++; C++; | 518 ms |

Incrementing fields A,B,C,D takes longer than incrementing fields A,C,E,G. And what’s even weirder, incrementing just A and C takes longer than increment A and C and E and G!

I don’t know for sure what is the reason behind these numbers, but I suspect it is related to memory banks. If someone can explain these numbers, I’d be very curious to hear about it.

The lesson of this example is that can be difficult to fully predict hardware performance. There is a lot that you can predict, but ultimately, it is very important to measure and verify your assumptions.

Read more of my articles:

Human heart is a Turing machine, research on XBox 360 shows. Wait, what?

Self-printing Game of Life in C#

Efficient auto-complete with a ternary search tree

Numbers that cannot be computed

And if you like my blog, subscribe!

Conclusion

Hopefully all of this helps you understand how caches work, and apply that knowledge when tuning your programs.

Wow! Excellent article. I can’t begin to explain how easy you made that to read and understand. Thanks so much!

Could it be that A++; C++; E++; G++; is being executed as a single SIMD instruction operating on a vector of four 64-bit integers?

[…] full post on Hacker News If you enjoyed this article, please consider sharing it! Tagged with: analysis […]

Just out of curiosity, you built all of this code unoptimized, correct?

Oh and also, for the last code snippet, just have a look at the machine code output between the various cases.

Great article, Igor!

@Tony:

The .NET JIT doesn’t do any SIMD optimizations.

Ben: this was all built with optimized code. I looked at the JIT-ted assembly code to make sure that the compiler didn’t “optimize away” the interesting part of each test.

For the last snippet, there is no interesting difference between the assembly outputs. Each static variable increment is simply an inc instruction. For the A,B,C,D and A,C,E,G cases, the assembly outputs only differ in the memory addresses passed to the inc instructions.

Awesome. Let me know if you want to open a computer science school. I’ll help.

“A++; B++; C++; D++;” vs. “A++; C++; E++; G++;”

“A++; C++; E++; G++;” vs. “A++; C++;”

My guess is that this is due to combination of manipulating half-words on a 64bit CPU and pipeline flushing. The first one is probably that the write back of A and B both depend on the calculation of each other whereas A C E and G are “independent” in memory. The second one doesn’t make a whole lot of sense except that the CPU may decide that the very tight accesses of A and C are too “hot” to be safely pipelined and force a pipeline stall.

Good, thorough analysis of the subject. Are the caches line sizes same for both 32 and 64 bit processors?

For a thorough analysis of all these effects and more from 2007, with more pretty graphs, see Ulrich Drepper’s fine paper on this topic.

http://people.redhat.com/drepper/cpumemory.pdf

Very nice explanation of some challenging concepts. Thanks for the good primer on this!

techinterview:

Different processors have potentially different cache line sizes, and the cache line size does not necessarily depend on whether it’s a 32 or 64-bit architecture. Since 64-bit machines have bigger pointers, perhaps largers cache lines would make sense.

Pentiums used to have 32-byte cache lines, but I’m not exactly sure when did Intel processors transition to 64-byte cache lines. And Itanium 2 had 128-byte cache lines.

Thanks for this. Really good article.

[…] Gallery of Processor Cache Effects – “Most of my readers will understand that cache is a fast but small type of memory that stores recently accessed memory locations. This description is reasonably accurate, but the “boring” details of how processor caches work can help a lot when trying to understand program performance…” […]

Great Article…. You made it look so easy to understand….

I wish I could have found such article during my Engineering.

Before function UpdateEveryKthByte I believe the text should read "65,536 chunks" instead of "65,536 slots".

Thanks for the nice article.

Thanks for the helpful primer. In the discussion of cache associativity, I started wondering if the impact of your 8-way L1 cache should be visible on the charts of step size versus array size. Given 32 KB L1 data cache with 64-byte cache lines, we have 512 slots and 512/8=64 sets. Also, accesses that differ by 64×64=4096 bytes will compete for an 8-way associative slot. However, only 32*1024/4096=8 competing sets fit in cache. So, a step size of 256 will result in 16 accesses for every 4096 bytes and yet L1 is only 8-way associative. This would make for longer blue lines because it re-inforces the L2 trend. Not sure if there is a clearer way to see this.

Intresting post. Thanks

The ACEG vs AC thing is pretty well explained by the section before.

If you look at it you have 4 cores. 2 of those cores have a shared level 2.

With ACEG you can have the following combos:

Core1 = A, Core2 = C, Core3 = E, Core4 = G (2 L2 Invalidates).

Core1 = A, Core2 = E, Core3 = C, Core4 = G (No L2 invalidates).

Core1 = A, Core2 = G, Core3 = C, Core4 = E (No L2 invalidates).

However you look at it you will be suffering less of the shared L2 invalidates than the L2 invalidates. However with just AC you have the following combos (Obviously there are different combos but 66% of the time you won’t be suffering the invalidate).

Core1=A, Core2=C, Core3=idle, Core4=idle (L2 invalidates).

Core1=idle, Core2=A, Core3=C, Core4=idle(no invlidates).

Plus other combos (Obviously).

But, now you are suffering the L2 invalidates 50% of the time. So while you may be doing less work you are, by virtue of doing less work, causing the L2 to flush more regularly and thus more cache misses to occur.

I think

Bart: Thanks for the explanation! That makes a lot of sense. I’ll add your explanation to the article.

Goz: In that test, all fields are getting updated from a single thread. I understand that the thread may migrate between cores, but do you think that the impact would be serious enough to cause the measured effect?

Ahh. I see. Shoulda read that better

Interesting .. I’ll have a think about that one

Perhaps ACEG each get their own core, thus the cache is always fresh. But AC gets alternated between cores 1&2 on odd iterations, and cores 3&4 on even iterations, thus causing more invalidations across cores.

I’ve managed to re-create exactly this effect under C++. Thus its not something weird being done by the C# compiler (Though admittedly it was a lot harder to stop the compiler just replacing the code with A = 2000000000 etc ;)). I’ve inspected the underlieing assembler as well and the loop is identical for the A++;B++;C++;D++; and the A++;C++;E++;G++; but the latter is over twice the speed of the former! This must, therefore, be a cache effect as you suggested. One would assume every one of the numbers would sit in the cache so somewhere along the line something is causing the cache to flush.

All I’ve noticed that this appears to have something to do with 8 bytes. If I change the code to use 16 shorts then I get the same effect with the lightning fast 4 way copy when 4 shorts apart.

I’m going to find out more on this because this is strange

I should also add that if I do 8 64-bit ints then performance wins on the 3rd case with 1st and 2nd being about the same.

I’ve posted up something on stackoverflow.com to see if anyone can help figure this issue out!

http://stackoverflow.com/quest.....under-msvc

Its got me properly lost!

[…] Gallery of Processor Cache Effects Ano ba ang cache at paano ito gumagana? (hindi ito datong ha) […]

[…] to access pixels in pixel buffer – you supposed to use scanlines, that is faster. Here is some interesting article about it. If you don’t have something to read, here is also nice CPU memory […]

WOW!!

Dude you are SO added to my iGoogle. Looking forward to reading more from you!!

Regards from Holland,

GJ

[…] 15, 2010 by Herb Sutter My colleague Igor Ostrovsky has written a useful summary of seven cache memory effects that every advanced developer should know about for their performance impact, particularly as we […]

Igor,

My guess on number 7 goes to some strange limitation of the store-forwarding, memory disambiguation hardware. (e.g. doing A.C A.C in quick sequence causes store forwarding to fail.)

That might be verifyable using performance monitoring events.

Marc

http://people.redhat.com/drepper/cpumemory.pdf

Longer, but better coverage.

[…] cache coherence is King. See here for an accessible discussion by IBM’s Paul McKenney and here for some remarkable examples from Igor Ostrovsky. This indicates that if you want the highest […]

[…] a kérdéses tömbelem kiolvasása ugyanannyiba fog kerülni. Részletesen, meg hasonló okosságok itt. Olvasd, […]

About the false sharing, another way to see the false sharing effect is the one here described:

http://cpp-today.blogspot.com/.....again.html

Great article! Really takes me back to my Computer Architecture classes. This makes me want to write about using blocks when doing matrix-matrix multiplication to keep more of your data in cache.

[…] PDRTJS_settings_984382_post_216 = { "id" : "984382", "unique_id" : "wp-post-216", "title" : "Processor+Cache+Effects", "item_id" : "_post_216", "permalink" : "http%3A%2F%2Fheeha.wordpress.com%2F2010%2F02%2F24%2Fprocessor-cache-effects%2F" } Processor Cache Effects […]

Thanks for the article Igor, it was a delight to read. Have you considered a follow up article that addresses the effects of TLB cache size when virtually addressing memory?

@Justin Paver: Thanks. Virtual memory is on my stack of possible future topics.

Hi Igor!

This was an excellent presentation on cache effects – thank you for putting in the work to create this. The thing I am really curious about – what are the tools you used to create the graphs? (Both timing and instruction dependencies)

I occasionally need to produce things like this and am always looking to improve my tool set.

@Rachel Blum: Thanks, great to hear you liked the article.

These are the tools I used:

– Line charts: Excel

– Instruction dependencies: Visio

– Heat maps: Generated the bitmap programmatically in C# and then labeled it by hand in Paint.NET (a Photoshop equivalent)

So, nothing too special.

[…] Gallery of Processor Cache Effects […]

[…] Link Categories: 1 Comments (0) Trackbacks (0) Leave a comment Trackback […]

[…] Some more stuff on processor cache effects. […]

Hi,

I have run example 1 on Intel Atom N270 @ 1.60Ghz (single core)

24kB L1 Data cache

32kB L1 Instr. cache

512kB L2

Results for different step values are not as predicted.

with i++ (K ==1) getting ~1500ms

with i+=2 (K ==2) getting ~1000ms

with i+=16 (K ==16) getting ~400ms

Any explanation ?

Wal,

Your numbers are surprisingly high. In my test, K=1 took 80ms, while in yours it took 1500ms.

– Did you use the array with 64*1024*1024 elements, as my example does? Or did you use a different array length?

– Did you use the C# code sample, or did you port it to a different language?

– Did you build in Release mode? Did you run with no debugger attached (outside of VS, or by CTRL+F5)?

Hopefully the answers to these questions will point us to the source of the difference.

Igor

Igor,

I was running from within a [TestMethod] as I like the convenience this provides.

I used the same size array and used C# but can only guess how MSTest runs the Test methods.

I moved the code to a Console app and ran it standalone, Release mode, the numbers are:

with i++ (K ==1) getting 540ms

with i+=2 (K ==2) getting 462ms

with i+=16 (K ==16) getting 456ms

note, i ran each K loop 10 times and averaged the time to smooth.

Results seem more aligned, Cheers

The following link does a decent job of explaining what can happen…

http://gcc.gnu.org/onlinedocs/.....ption.html

[…] Ostrovsky – Gallery of Processor Cache Effects, Bookmark […]