The memory model is a fascinating topic – it touches on hardware, concurrency, compiler optimizations, and even math.

The memory model defines what state a thread may see when it reads a memory location modified by other threads. For example, if one thread updates a regular non-volatile field, it is possible that another thread reading the field will never observe the new value. This program may never terminate (in a release build):

```csharp
using System.Threading;

class Test
{
    private bool _loop = true;

    public static void Main()
    {
        Test test1 = new Test();

        // Set _loop to false on another thread
        new Thread(() => { test1._loop = false; }).Start();

        // Poll the _loop field until it is set to false
        while (test1._loop == true) ;

        // The loop above may never terminate!
    }
}
```

There are two possible ways to get the while loop to terminate:

- Use a lock to protect all accesses (reads and writes) to the _loop field

- Mark the _loop field as volatile
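A minimal sketch of both fixes follows. The class names `VolatileLoop` and `LockedLoop`, the `_gate` lock object, and the `Run` helpers are hypothetical additions for illustration; the polling logic is the same as in the program above. In practice, both loops terminate once the other thread's write becomes visible:

```csharp
using System.Threading;

// Fix 1 (hypothetical class name): mark the flag volatile.
class VolatileLoop
{
    // volatile: the JIT must re-read the field on every iteration.
    private volatile bool _loop = true;

    public static bool Run()
    {
        var test = new VolatileLoop();
        new Thread(() => { test._loop = false; }).Start();
        while (test._loop) { }   // exits once the other thread's write is observed
        return true;
    }
}

// Fix 2 (hypothetical class name): protect every read and write with a lock.
class LockedLoop
{
    private readonly object _gate = new object();
    private bool _loop = true;

    private bool ReadLoop()
    {
        lock (_gate) { return _loop; }   // lock acquire: no stale cached value
    }

    private void StopLoop()
    {
        lock (_gate) { _loop = false; }  // lock release: the write becomes visible
    }

    public static bool Run()
    {
        var test = new LockedLoop();
        new Thread(test.StopLoop).Start();
        while (test.ReadLoop()) { }
        return true;
    }
}
```

The lock-based variant is heavier, but it generalizes to state that spans more than one field, which a single volatile flag cannot protect.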

There are two reasons why a read of a non-volatile field may observe a stale value: compiler optimizations and processor optimizations.

In concurrent programming, threads can get interleaved in many different ways, resulting in possibly many different outcomes. But as the example with the infinite loop shows, threads do not just get interleaved – they potentially interact in more complex ways, unless you correctly use locks and volatile fields.

Compiler optimizations

The first reason why a non-volatile read may return a stale value has to do with compiler optimizations. In the infinite loop example, the JIT compiler optimizes the while loop from this:

```csharp
while (test1._loop == true) ;
```

To this:

```csharp
if (test1._loop) { while (true); }
```

This is an entirely reasonable transformation if only one thread accesses the _loop field. But, if another thread changes the value of the field, this optimization can prevent the reading thread from noticing the updated value.

If you mark the _loop field as volatile, the compiler will not hoist the read out of the loop. The compiler will know that other threads may be modifying the field, and so it will be careful to avoid optimizations that would result in a read of a stale value.

The code transformation I showed is a close approximation of the optimization done by the CLR JIT compiler, but not completely exact.

The full story is that the assembly code emitted by the JIT compiler will store the value test1._loop in the EAX register. The loop condition will keep polling the register, and will never read test1._loop from memory again. Even when the thread is pre-empted, the CPU registers get saved. Once the thread is scheduled to run again, the same stale EAX register value will be restored, and the loop never terminates.

The assembly code generated by the while loop looks as follows:

```asm
00000068  test  eax,eax
0000006a  jne   00000068
```

If you make the _loop field volatile, this code is generated instead:

```asm
00000064  cmp   byte ptr [eax+4],0
00000068  jne   00000064
```

If the _loop field is not volatile, the compiler will store _loop in the EAX register. If _loop is volatile, the compiler will instead keep the test1 variable in EAX, and the value of _loop will be re-fetched from memory on each access (by “ptr [eax+4]”).

From my experience playing around with the current version of the CLR, I get the impression that these kinds of compiler optimizations are not terribly frequent. On x86 and x64, often the same assembly code will be generated regardless of whether a field is volatile or not. On IA64, the situation is a bit different – see the next section.

Processor optimizations

On some processors, not only must the compiler avoid certain optimizations on volatile reads and writes, it also has to use special instructions. On a multi-core machine, different cores have different caches. The processors may not bother to keep those caches coherent by default, and special instructions may be needed to flush and refresh the caches.

The mainstream x86 and x64 processors implement a strong memory model where memory access is effectively volatile. So, a volatile field forces the compiler to avoid some high-level optimizations like hoisting a read out of a loop, but otherwise results in the same assembly code as a non-volatile read.

The Itanium processor implements a weaker memory model. To target Itanium, the JIT compiler has to use special instructions for volatile memory accesses: LD.ACQ and ST.REL, instead of LD and ST. The LD.ACQ instruction effectively says, “refresh my cache and then read a value”, and ST.REL says, “write a value to my cache and then flush the cache to main memory”. LD and ST, on the other hand, may just access the processor’s cache, which is not visible to other processors.

For the reasons explained in this section and the previous sections, marking a field as volatile will often incur zero performance penalty on x86 and x64.

The x86/x64 instruction set actually does contain three fence instructions: LFENCE, SFENCE, and MFENCE. LFENCE and SFENCE are apparently not needed on the current architecture, but MFENCE is useful to work around one particular issue: if a core reads a memory location it previously wrote, the read may be served from the store buffer, even though the write has not yet been written to memory. [Source] I don’t actually know whether the CLR JIT ever inserts MFENCE instructions.
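One classic pattern where store-buffer effects matter is a store to one location followed by a load of a different one, as in Dekker-style flag handshakes. The sketch below is hypothetical (the `StoreLoadDemo` class and its fields are illustrative): each thread sets its own flag and then reads the other thread's flag. With `Thread.MemoryBarrier()` between the store and the load, at least one thread must observe the other's write; without the barrier, x86/x64 hardware is allowed to let both threads read 0.

```csharp
using System.Threading;

class StoreLoadDemo
{
    private int _flag1, _flag2;   // each thread's "I am here" flag
    private int _seen1, _seen2;   // what each thread read from the other's flag

    private void Thread1()
    {
        _flag1 = 1;               // store
        Thread.MemoryBarrier();   // full fence: the store cannot be reordered after the load
        _seen1 = _flag2;          // load of a different location
    }

    private void Thread2()
    {
        _flag2 = 1;
        Thread.MemoryBarrier();
        _seen2 = _flag1;
    }

    // Returns true if any run observed both threads reading 0.
    public static bool BothSawZero(int iterations)
    {
        for (int i = 0; i < iterations; i++)
        {
            var demo = new StoreLoadDemo();
            var t1 = new Thread(demo.Thread1);
            var t2 = new Thread(demo.Thread2);
            t1.Start();
            t2.Start();
            t1.Join();            // Join makes the threads' results visible here
            t2.Join();
            if (demo._seen1 == 0 && demo._seen2 == 0) return true;
        }
        return false;
    }
}
```

Removing the two `Thread.MemoryBarrier()` calls makes the both-zero outcome possible, although it may take many iterations to observe.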

Volatile accesses in more depth

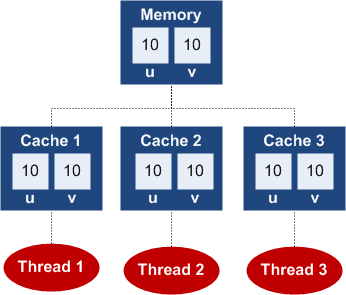

To understand how volatile and non-volatile memory accesses work, you can imagine each thread as having its own cache. Consider a simple example with a non-volatile memory location (i.e. a field) u, and a volatile memory location v.

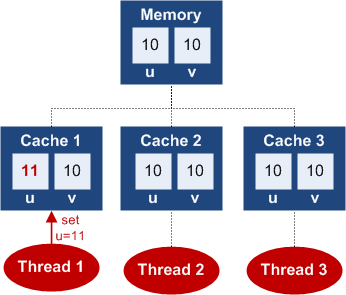

A non-volatile write could just update the value in the thread’s cache, and not the value in main memory:

However, in C# all writes are volatile (unlike, say, in Java), regardless of whether you write to a volatile or a non-volatile field. So, the above situation actually never happens in C#.

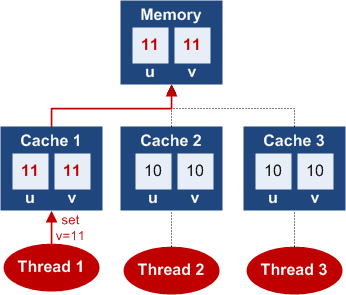

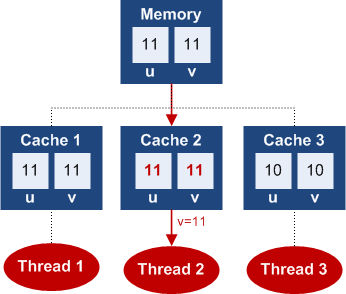

A volatile write updates the thread’s cache, and then flushes the entire cache to main memory. If we were to now set the volatile field v to 11, both values u and v would get flushed to main memory:

Since all C# writes are volatile, you can think of all writes as going straight to main memory.

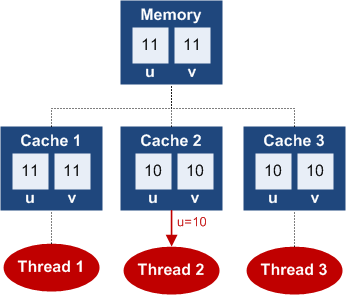

A regular, non-volatile read can read the value from the thread’s cache, rather than from main memory. Despite the fact that thread 1 set u to 11, when thread 2 reads u, it will still see value 10:

When you read a non-volatile field in C#, a non-volatile read occurs, and you may see a stale value from the thread’s cache. Or, you may see the updated value. Whether you see the old or the new value depends on your compiler and your processor.

Finally, let’s take a look at an example of a volatile read. Thread 2 will read the volatile field v:

Before the volatile read, thread 2 refreshes its entire cache, and then reads the updated value of v: 11. So, it will observe the value that is really in main memory, and also refresh its cache as a bonus.

Note that the thread caches that I described are imaginary – there really is no such thing as a thread cache. Threads only appear to have these caches as an artifact of compiler and processor optimizations.

One interesting point is that all writes in C# are volatile according to the memory model as documented here and here, and are also presumably implemented as such. The ECMA specification of the C# language actually defines a weaker model where writes are not volatile by default.

You may find it surprising that a volatile read refreshes the entire cache, not just the read value. Similarly, a volatile write (i.e., every C# write) flushes the entire cache, not just the written value. These semantics are sometimes referred to as “strong volatile semantics”.
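To make the imaginary caches concrete, here is a toy simulation of the model, assuming the strong volatile semantics described above: every write flushes, a volatile read refreshes the whole cache, and an ordinary read may hit a stale cached copy. `MainMemory` and `SimulatedThreadCache` are hypothetical names; this models the mental picture, not the CLR or any processor.

```csharp
using System.Collections.Generic;

// Toy model of the imaginary thread caches. It mirrors the mental picture
// only; real hardware does not work this way.
class MainMemory
{
    public readonly Dictionary<string, int> Values = new Dictionary<string, int>();
}

class SimulatedThreadCache
{
    private readonly MainMemory _memory;
    private readonly Dictionary<string, int> _cache = new Dictionary<string, int>();
    private readonly HashSet<string> _dirty = new HashSet<string>();

    public SimulatedThreadCache(MainMemory memory) { _memory = memory; }

    // Ordinary read: served from this thread's cache when present, so it may be stale.
    public int Read(string field)
    {
        if (!_cache.TryGetValue(field, out int value))
        {
            value = _memory.Values[field];
            _cache[field] = value;
        }
        return value;
    }

    // Volatile read: refresh the entire cache from main memory, then read.
    public int VolatileRead(string field)
    {
        Refresh();
        return Read(field);
    }

    // Every C# write (volatile or not): update the cache, then flush the entire cache.
    public void Write(string field, int value)
    {
        _cache[field] = value;
        _dirty.Add(field);
        Flush();
    }

    // Write back every modified entry; unmodified (possibly stale) entries are not written.
    private void Flush()
    {
        foreach (string field in _dirty) _memory.Values[field] = _cache[field];
        _dirty.Clear();
    }

    // Drop all cached values so that subsequent reads come from main memory.
    // Nothing is lost: Write always flushes, so the cache holds no unflushed data here.
    private void Refresh()
    {
        _cache.Clear();
    }
}
```

Replaying the u/v walkthrough: thread 2 caches u = 10, thread 1 writes u = 11 and v = 11, thread 2's ordinary read of u still returns 10, and its volatile read of v returns 11 and refreshes u as a bonus.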

The original Java memory model designed in 1995 was based on weak volatile semantics, but was changed in 2004 to strong volatile. The weak volatile model is very inconvenient. One example of the problem is that the “safe publication” pattern is not safe. Consider this example:

```csharp
volatile string[] _args = null;

public void Write()
{
    string[] a = new string[2];
    a[0] = "arg1";
    a[1] = "arg2";
    _args = a;
    // ...
}

public void Read()
{
    if (_args != null)
    {
        // Under weak volatile semantics, this assert could fail!
        Debug.Assert(_args[0] != null);
    }
}
```

Under strong volatile semantics (i.e., the .NET and C# volatile semantics), a non-null value in the _args field guarantees that the elements of _args are also not null. The safe publication pattern is very useful and commonly used in practice.
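A runnable variant of the safe publication pattern, assuming a single writer that publishes once: the reader copies the volatile field into a local so that it dereferences exactly the reference it checked. `SafePublication` and `ReadFirst` are hypothetical names.

```csharp
class SafePublication
{
    // The volatile field is the publication point.
    private volatile string[] _args = null;

    public void Write()
    {
        string[] a = new string[2];
        a[0] = "arg1";
        a[1] = "arg2";
        _args = a;   // publish only after the array is fully initialized
    }

    // Spins until the array is published, then returns its first element.
    public string ReadFirst()
    {
        string[] a;
        while ((a = _args) == null) { }   // volatile read on every iteration
        return a[0];                      // under strong volatile semantics, never null here
    }
}
```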

Memory model and .NET operations

Here is a table of how various .NET operations interact with the imaginary thread cache:

| Construct | Refreshes thread cache before? | Flushes thread cache after? | Notes |
|---|---|---|---|
| Ordinary read | No | No | Read of a non-volatile field |
| Ordinary write | No | Yes | Write of a non-volatile field |
| Volatile read | Yes | No | Read of volatile field, or Thread.VolatileRead |
| Volatile write | No | Yes | Write of a volatile field – same as non-volatile |
| Thread.MemoryBarrier | Yes | Yes | Special memory barrier method |
| Interlocked operations | Yes | Yes | Increment, Add, Exchange, etc. |
| Lock acquire | Yes | No | Monitor.Enter or entering a lock {} region |
| Lock release | No | Yes | Monitor.Exit or exiting a lock {} region |

For each operation, the table shows two things:

- Is the entire imaginary thread cache refreshed from main memory before the operation?

- Is the entire imaginary thread cache flushed to main memory after the operation?
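As a rough illustration of the table (the `CounterExamples` class and its members are hypothetical), the sketch below increments one counter with `Interlocked.Increment` and another under a `lock`, and reads each back through a matching synchronized read. `Volatile.Read` comes from the `System.Threading.Volatile` class, available since .NET Framework 4.5; on older versions, `Thread.VolatileRead` plays the same role.

```csharp
using System.Threading;

class CounterExamples
{
    private int _interlockedCount;
    private int _lockedCount;
    private readonly object _gate = new object();

    // "Interlocked operations" row: refresh before, flush after, and atomic.
    public void IncrementInterlocked() => Interlocked.Increment(ref _interlockedCount);

    // "Lock acquire" refreshes on entry; "Lock release" flushes on exit.
    public void IncrementLocked()
    {
        lock (_gate) { _lockedCount++; }
    }

    // "Volatile read" row: refresh before reading.
    public int InterlockedCount => Volatile.Read(ref _interlockedCount);

    public int LockedCount
    {
        get { lock (_gate) { return _lockedCount; } }
    }

    public static CounterExamples RunConcurrently(int threads, int incrementsPerThread)
    {
        var counters = new CounterExamples();
        var workers = new Thread[threads];
        for (int t = 0; t < threads; t++)
        {
            workers[t] = new Thread(() =>
            {
                for (int i = 0; i < incrementsPerThread; i++)
                {
                    counters.IncrementInterlocked();
                    counters.IncrementLocked();
                }
            });
            workers[t].Start();
        }
        foreach (var w in workers) w.Join();
        return counters;
    }
}
```

Both counters end at threads × increments. Replacing either increment with a plain `++` on an unsynchronized field can lose updates, because a read-modify-write is not atomic.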

Disclaimer and limitations of the model

This blog post reflects my personal understanding of the .NET memory model, and is based purely on publicly available information.

I find the explanation based on imaginary thread caches more intuitive than the more commonly used explanation based on operation reordering. The thread cache model is also accurate for most intents and purposes.

To be even more accurate, you should assume that the thread caches can form an arbitrarily large hierarchy, and so you cannot assume that a read is served from only two possible places: main memory or the thread’s cache. I think that you would have to construct a somewhat clever case for the cache hierarchy to make a difference, though. If anyone is aware of a case where the hierarchical thread cache model makes a prediction different from the reordering-based model, I would love to hear about it.

If you are interested in the .NET memory model, I encourage you to read Understand the Impact of Low-Lock Techniques in Multithreaded Apps in the MSDN Magazine, and the Memory model blog post from Chris Brumme.


I’m not sure I understand what you mean when you say a volatile read refreshes the entire cache, not just the read value.

Are we talking about the “thread” cache here, or the hardware cache (i.e., the whole L1, L2, L3 cache chain)? Or is it more like a read-through operation? (I guess not, since that is weak volatile semantics as I understand you.)

If we are talking about a complete flush of the hardware caches, that would be a “huge” overhead as I see it, which does not square with your statement: marking a field as volatile will often incur zero performance penalty on x86 and x64.

Great question. The semantics in x86 / x64 are pretty close to all writes going to main memory and all reads coming from main memory. (There is one interesting difference, apparently – if a thread writes and then reads the same value, the read may be served from the thread’s cache.)

As far as I understand, each write goes to the cache. But, MESI protocol is used to ensure that if another core reads the written value, the reader will know where to get the most recent copy of the value.

I will add these clarifications to the article.

“and then flushes the entire cache to main memory”

Do you mean exactly that memory location, the cache line or really the entire cache?

OmariO: See my reply to Toke Noer. Logically, the entire cache is flushed to main memory on each (volatile) write.

What really happens is that each write goes into the cache. To provide the illusion of writes going into main memory, there is signaling in place – if another core reads the modified memory location, it will know that it needs to get that value from another core’s cache. This is called the MESI protocol.

I’ll consider writing a separate article on what actually happens on x86, since there seems to be interest in the topic.
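The cache-line granularity discussed in this thread can be illustrated with a deliberately simplified MESI sketch: one cache line holding a single `int`, a few cores, and no buses or timing. `MesiLine` and `MesiState` are hypothetical names; real processors add many details (read-for-ownership messages, write-back buffers, protocol variants) that are omitted here.

```csharp
// Deliberately simplified MESI model: one cache line holding a single int,
// a fixed number of cores, no buses or timing.
enum MesiState { Invalid, Shared, Exclusive, Modified }   // Invalid is the default (0)

class MesiLine
{
    private readonly MesiState[] _state;   // per-core state of this line
    private readonly int[] _cached;        // per-core cached copy of the line
    private int _memory;                   // the line's value in main memory

    public MesiLine(int cores, int initialValue)
    {
        _state = new MesiState[cores];     // every core starts Invalid
        _cached = new int[cores];
        _memory = initialValue;
    }

    public MesiState StateOf(int core) => _state[core];

    public int Read(int core)
    {
        if (_state[core] != MesiState.Invalid) return _cached[core];   // cache hit

        // Miss: snoop the other cores. A Modified owner writes its data back first.
        bool othersHaveCopy = false;
        for (int i = 0; i < _state.Length; i++)
        {
            if (i == core || _state[i] == MesiState.Invalid) continue;
            if (_state[i] == MesiState.Modified) _memory = _cached[i];
            _state[i] = MesiState.Shared;
            othersHaveCopy = true;
        }
        _cached[core] = _memory;
        _state[core] = othersHaveCopy ? MesiState.Shared : MesiState.Exclusive;
        return _cached[core];
    }

    public void Write(int core, int value)
    {
        // Invalidate every other copy so that no core keeps reading a stale value.
        for (int i = 0; i < _state.Length; i++)
        {
            if (i == core) continue;
            if (_state[i] == MesiState.Modified) _memory = _cached[i];   // owner writes back first
            _state[i] = MesiState.Invalid;
        }
        _cached[core] = value;
        _state[core] = MesiState.Modified;   // main memory is stale until a write-back
    }
}
```

A write moves other copies of that one line to Invalid instead of flushing anything globally, so coherence costs are paid per line, and only when another core actually touches the line.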

Incredibly useful info! This is probably the best explanation of volatile I’ve found to date.

Excellent article on volatile in general! Yes, on x86{_64} specifically, I have never seen the non-volatile read races, as all recent gcc compilers always generate a “lea” instruction instead of a load from a register in either the non-optimized (volatile) or the optimized case. The memory barrier sometimes has to be enforced for IO instructions through an asm volatile("" ::: "memory") memory barrier, which is equivalent to the fencing instructions you mentioned.

Maybe you can make the thread cache references in the article more explicit by giving an example of 2 threads scheduled on different cores/CPUs, in which case each thread cache maps to a different CPU’s cache. But it’s interesting to note that you say volatile reads/writes result in flushing the entire CACHE rather than the corresponding CACHELINE, triggering MESI for the other CPU’s cache line sync. Are you sure it’s an entire D-CACHE sync for a volatile read/write instead of a cache line sync?

If that’s actually the case, then it’s really very heavy. Maybe you can check the degradation with “rdtsc” for volatile and compare it with non-volatile above.

I doubt that’s the case. In MESI (http://en.wikipedia.org/wiki/MESI_protocol), if you read there, it talks in terms of cache lines. When a line is in the Modified state as a result of a write, the cache holding it would SNOOP (intercept) reads to main memory from other caches holding an invalid copy of that line, and have them update their line with the data from the modified cache line. So it has to be a cache-line-based sync instead of a whole-cache sync, since a line is mapped to memory and modified lines can snoop reads to that location and have the readers update their lines.

You might enjoy The LoseThos Operating System judging by your interest. It identity-maps all virtual to physical memory and, naturally, all tasks on all cores share just one map! No distinction between “thread” and “process”.

The compiler has a “lock{}” command which is necessary for multicored read-modify-and-write. With multicore chips, as opposed to separate chips, the cache is kept coherent.

Anyway, give LoseThos a spin, you’ll be glad you did. Tons of neat features.

Does Monitor.Wait refresh the memory cache? Or should I add a Thread.MemoryBarrier after the Monitor.Wait (or use other synchronized ways of reading/writing variables) if I want to know the newest value of a variable? For example:

```csharp
public int FieldThatCanBeModifiedByOtherThreads;

public void MyMethod()
{
    lock (this)
    {
        FieldThatCanBeModifiedByOtherThreads = 1;
        Monitor.Wait(this);
        if (FieldThatCanBeModifiedByOtherThreads != 1)
        {
            // Do something
        }
    }
}

public void InAnotherThread()
{
    lock (this)
    {
        FieldThatCanBeModifiedByOtherThreads = 2;
        Monitor.Pulse(this);
        FieldThatCanBeModifiedByOtherThreads = 3;
    }
}
```

Is the if in the MyMethod guaranteed to read the “newest” value (or at least a value as fresh as the Monitor.Pulse/Monitor.Exit that let the Wait continue)? So is it guaranteed to read 2 or 3 but not 1?

@A R Karthick: Logically, the entire cache is flushed on each write. This is important for the “safe publication” pattern – you can initialize some data structure and write a reference to it into a volatile field. Any readers who see the reference to the data structure are guaranteed to see its contents in a fully initialized state. Admittedly, I don’t fully understand all of the details in the hardware that make this guarantee happen.

@Massimiliano: I think that it is safe to assume that Monitor.Wait and Monitor.Pulse are full barriers. But I cannot find this documented explicitly anywhere.

This same point about Monitor.Pulse was raised again (good timing!) here: http://stackoverflow.com/questions/2431238/ I think that demonstrates further that it is (at best) unclear. If any official word on this question does surface, I’d be hugely interested.
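For what it is worth, `Monitor.Wait` releases the lock and reacquires it before it returns, so the read that follows it happens under the lock, after the pulsing thread has released it. The sketch below restructures the question's example along those lines; the private `_gate` object, the `_waiterReady` flag (which prevents a pulse from being lost if `InAnotherThread` runs first), and the `while` loop around `Wait` are additions, not part of the original code. Under these assumptions `MyMethod` returns 3, because the waiter cannot reacquire the lock until `InAnotherThread` has finished its whole critical section.

```csharp
using System.Threading;

class WaitPulseExample
{
    private readonly object _gate = new object();   // private lock object instead of lock (this)
    private int _field;
    private bool _waiterReady;                       // hypothetical handshake flag

    public int MyMethod()
    {
        lock (_gate)
        {
            _field = 1;
            _waiterReady = true;
            Monitor.PulseAll(_gate);   // wake InAnotherThread if it is already waiting for us
            while (_field == 1)        // guards against waking before the update
                Monitor.Wait(_gate);   // releases _gate, reacquires it before returning
            return _field;             // read under the reacquired lock
        }
    }

    public void InAnotherThread()
    {
        lock (_gate)
        {
            while (!_waiterReady)      // do not pulse before MyMethod is waiting
                Monitor.Wait(_gate);
            _field = 2;
            Monitor.Pulse(_gate);
            _field = 3;                // still holding the lock, so the waiter cannot run yet
        }
    }
}
```

Using a private lock object rather than `lock (this)` keeps outside code from accidentally waiting or pulsing on the same monitor.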

Great explanation!

I have a question I can’t seem to get answered: what happens with respect to [ThreadStatic] variables? It is my understanding that static variables marked with [ThreadStatic] could still suffer from the same multicore issues that volatile is meant to solve.

Chris: Can you give an example of the case you are concerned about?

Normally, thread-static fields should not have a problem.

A thread-static field has a separate copy of the value for each thread. So, a thread cannot possibly see a value written by another thread, simply by design.

For example, if one thread is looping and polling a thread-static field, it is OK to hoist the read out of the loop. If another thread modifies the thread-static field, it will modify its own copy, not the one that the looping thread is checking.
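A small sketch of this behavior, with a hypothetical `ThreadStaticDemo` class: the main thread sets its copy to 1, and a second thread reads its own copy (still the default 0) and then writes 42 to it without affecting the main thread's copy.

```csharp
using System.Threading;

class ThreadStaticDemo
{
    // Each thread gets its own copy; every copy starts at the default value (0).
    [ThreadStatic]
    private static int t_counter;

    // Returns what the calling thread sees, and what a second thread saw in its own copy.
    public static (int MainThreadValue, int OtherThreadValue) Demo()
    {
        t_counter = 1;                 // the calling thread's copy
        int otherValue = -1;
        var other = new Thread(() =>
        {
            otherValue = t_counter;    // the other thread's copy: still 0
            t_counter = 42;            // changes only the other thread's copy
        });
        other.Start();
        other.Join();
        return (t_counter, otherValue);
    }
}
```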

Igor: Please disregard my comment – I was fighting with a threading bug all day and by the time I came upon this entry, my brain was working at 1/2 capacity.

Your explanation makes perfect sense. My question was a case of classic overthinking the problem.

Glad I found this blog – it is so hard to find information about these subjects. Following you on twitter now.

Thanks for the great writeup.


Thank you for such a great explanation.

Let’s say we added a second field to your Test class, “private Object _loopLock = new Object();”, and used it to protect all reads and writes to _loop. We know from the language spec that all fields are initialized to their default values (in this case null) (http://msdn.microsoft.com/en-u.....71%29.aspx). As such, couldn’t the “set thread” have a stale initial version of _loopLock in its cache (i.e. “null”) and attempt to lock on it? This seems to raise a chicken-and-egg problem of requiring a second lock to protect the first lock, and so on and so forth…

Hi Matt,

The safe thing to do would be to publish the Test object by writing it into a volatile field.

1. The writer first writes all fields on the Test object, and then writes the reference to the Test object into a volatile field.

2. The reader first reads the reference from a volatile field, and then reads fields of the Test object.

If the object is published via a volatile field, the reader cannot possibly observe the state of the object before it was initialized by the constructor. An alternative solution is to write the object into a regular field under a lock, and then read it under the same lock.
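A sketch of the lock-based alternative, with hypothetical `Settings` and `SettingsRegistry` types. Making the lock object a `static readonly` field sidesteps the chicken-and-egg concern raised above: the runtime runs static field initializers before the fields are first used and makes that type initialization thread-safe, so every thread locks on the same, fully constructed object.

```csharp
class Settings
{
    public readonly int Timeout;
    public readonly string Name;

    public Settings(int timeout, string name) { Timeout = timeout; Name = name; }
}

static class SettingsRegistry
{
    // Initialized once by the type initializer, before first use.
    private static readonly object s_gate = new object();
    private static Settings s_current;   // ordinary field, accessed only under s_gate

    public static void Publish(Settings settings)
    {
        lock (s_gate) { s_current = settings; }   // lock release flushes the constructor's writes too
    }

    public static Settings TryGet()
    {
        lock (s_gate) { return s_current; }        // lock acquire refreshes before the read
    }
}
```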

HOWEVER, in today’s .NET implementation on today’s hardware, I understand that you may be able to get away with removing the volatile modifier on the publication field, even though I would recommend against doing that. For example, Vance Morrison’s article (http://msdn.microsoft.com/en-u.....63715.aspx) on memory models suggests that this lazy initialization pattern is safe:

```csharp
public class LazyInitClass
{
    private static LazyInitClass myValue = null;

    public static LazyInitClass GetValue()
    {
        if (myValue == null)
            myValue = new LazyInitClass();
        return myValue;
    }
}
```

I find this particular example very tricky, even trickier than Vance’s article admits. I understand that the order of the writes to the new object’s fields and to myValue is guaranteed by the strong ordering of writes. But the order of the reads has to be enforced by some rule as well. My rough understanding is that x86/x64 will avoid reordering the reads because there is a data dependency between them.

Unfortunately, in this area, even experts disagree on what precisely is and what isn’t guaranteed (example), and so I am not going to claim to know.

I hope this helps. The summary is this: publish the object either via a volatile field or a field protected by a lock, and you’ll be fine. In typical cases, you’ll have some sort of a synchronization between the reader and the writer to pass the object anyways, and that synchronization will be sufficient to insert the appropriate barriers.
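Applying that summary to the lazy initialization example gives the familiar double-checked locking shape, sketched below under the assumption that exactly one instance should ever be created: the fast path is a single volatile read, and the slow path re-checks under a lock before publishing through the volatile field. The `s_value` and `s_lock` names are hypothetical.

```csharp
public class LazyInitClass
{
    private static volatile LazyInitClass s_value;          // published via a volatile field
    private static readonly object s_lock = new object();

    public static LazyInitClass GetValue()
    {
        LazyInitClass v = s_value;        // one volatile read on the fast path
        if (v == null)
        {
            lock (s_lock)
            {
                v = s_value;              // re-check: another thread may have won the race
                if (v == null)
                {
                    v = new LazyInitClass();
                    s_value = v;          // volatile write after construction completes
                }
            }
        }
        return v;
    }
}
```

On .NET Framework 4 and later, `System.Lazy<T>` packages the same idea behind a `Value` property.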

This is one of the better explanations of the .NET memory model I have seen. You have done a great job describing the behavior using the “thread cache” illustration. Joe Albahari has a great write-up on the topic as well, but uses the instruction reordering terminology. The astute reader of both can appreciate how they complement each other.

Good article. I appreciate the behind-the-scenes explanation; the more I seek to optimize C# code, the more I find myself digging into how the IL/JIT compilers map back to actual processor instructions.

This topic seems particularly useful for out-of-process services that are intended to provide asynchronous handling of multiple tasks (such as for a high-traffic website that relies on a SOA to display aggregated data).

Very accessible, figurative explanation of how the things actually work with a volatile keyword. Thanks!

Speaking of Albahari, in his section on the volatile keyword he seems to say the opposite of what you say. In his “IfYouThinkYouUnderstandVolatile” example, he says that a write and a read could be swapped, because the write can be deferred and the read would then read a “stale” value. His analysis of Thread.VolatileWrite and Thread.VolatileRead seems to confirm this. This makes my head explode. He references the article “Volatile reads and writes, and timeliness” by Joe Duffy, which in its last paragraphs seems to say the same thing.


Woops links lost…

http://www.albahari.com/thread.....le_keyword

http://www.bluebytesoftware.co.....iness.aspx

I tried running the example at the top of the article. It terminates immediately. Did something change with the Framework?

Sorry… switched to release build and it doesn’t terminate maybe 1 in 5 tries.

Hello sir,

Could you answer my question? I am asking you because it is related to this article. Can you help me here, please:

http://social.msdn.microsoft.c.....f41a9f84d1

Nice article. Thanks.

Thank you very much for explaining this issue. After reading through several websites which only showed C# sources and gave really weak explanations, this article told me exactly what I was looking for.


According to your last table’s combination of refreshing the cache before a read and flushing it after a write, a volatile read is the same as a lock acquire, and a volatile write is the same as a lock release. However, a lock also has the effect of telling other threads to hold off on accessing the cache.

Essentially, volatile has to do with cache freshness, and lock has to do with cache accessibility. It would be interesting to see how you would use your thread cache model to explain both freshness and accessibility together.