Parity protection is a common technique for reliable data storage on mediums that may fail (HDDs, SSDs, storage servers, etc.) Calculation of parity uses tools from algebra in very interesting ways, in particular in the dual parity case.

This article is for computer engineers who would like to have a high-level understanding of how the dual parity calculation works, without diving into all of the mathematical details.

Single parity

Let’s start with the simple case – single parity. As an example, say that you need to reliably store 5 data blocks of a fixed size (e.g., 4kB). You can put the 5 data blocks on 5 different storage devices, and then store a parity block on a 6th device. If any one device fails, you can still recompute the block from the failed device, and so you haven’t lost any data.

A simple way to calculate the parity P is just to combine the values a, b, c, d, e with the exclusive-or (XOR) operator:

P = a XOR b XOR c XOR d XOR e

Effectively, every parity bit protects the corresponding bits of a, b, c, d, e.

- If the first parity bit is 0, you know that the number of 1 bits among the first bits of a, b, c, d, e is even.

- Otherwise the number of 1 bits is odd.

Given a, b, c, e and P, it is easy to reconstruct the missing block d:

d = P XOR a XOR b XOR c XOR e

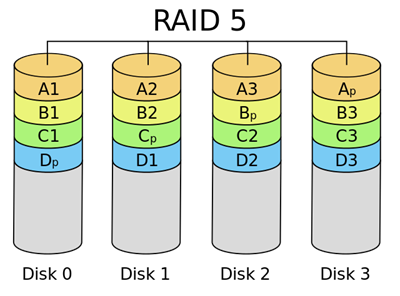

Straightforward so far. Exclusive-or parity is commonly used in storage systems as RAID-5 configuration:

RAID-5 uses the exclusive-or parity approach, except that the placement of parity is rotated among the storage devices. Parity blocks gets more overwrites than data blocks, so it makes sense to distribute them among the devices.

What about double parity?

Single parity protects data against the loss of a single storage device. But, what if you want protection against the loss off two devices? Can we extend the parity idea to protect the data even in that scenario?

It turns out that we can. Consider defining P and Q like this:

P = a + b + c + d + e

Q = 1a + 2b + 3c + 4d + 5e

If you lose any two of { a, b, c, d, e, P, Q }, you can recover them by plugging in the known values into the equations above, and solving for the two unknowns. Simple.

For example, say that { a, b, c, d, e } are { 10, 5, 8, 13, 2 }. Then:

P = 10 + 5 + 8 + 13 + 2 = 38

Q = 10 + 2 × 5 + 3 × 8 + 4 × 13 + 5 × 2 = 106

If you lost c and d, you can recover them by solving the original equations.

How come you can always solve the equations?

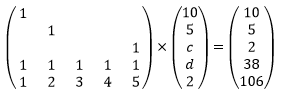

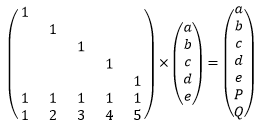

To explain why we can always solve the equations to recover two missing blocks, we need to take a short detour into matrix algebra. We can express the relationship between a, b, c, d, e, P, Q as the following matrix-vector multiplication:

We have 7 linear equations of 5 variables. In our example, we lose blocks c and d and end up with 5 equations of 5 variables. Effectively, we lost two rows in the matrix and the two matching rows in the result vector:

This system of equations is solvable because the matrix is invertible: the five rows are all linearly independent.

In fact, if you look at the original matrix, any five rows would have been linearly independent, so we could solve the equations regardless of which two devices were missing.

But, integers are inconvenient…

Calculating P and Q from the formulas above will allow you to recover up to two lost data blocks.

But, there is an annoying technical problem: the calculated values of P and Q can be significantly larger than the original data values. For example, if the data block is 1 byte, its value ranges from 0 to 255. But in that case, the Q value can reach 3825! Ideally, you’d want P and Q to have the same range as the data values themselves. For example, if you are dealing with 1-byte blocks, it would be ideal if P and Q were 1 byte as well.

We’d like to redefine the arithmetic operators +, –, ×, / such that our equations still work, but the values of P and Q stay within the ranges of the input values.

Thankfully, this is a well-studied problem in algebra. One simple approach that works is to pick a prime number M, and change the meaning of +, –, and × so that values "wrap around" at M. For example, if we picked M=7, then:

6 + 1 = 0 (mod 7)

4 + 5 = 2 (mod 7)

3 × 3 = 2 (mod 7)

etc.

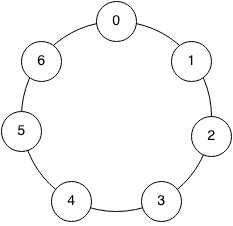

Here is how to visualize the field:

Since 7 is a prime number, this is an example of a finite field. Additions, subtractions, multiplications and even divisions work in a finite field, and so our parity equations will work even modulo 7. In some sense, finite fields are more convenient than integers: dividing two integers does not necessarily give you an integer (e.g., 5 / 2), but in a finite field, division of two values always gives you another value in the field (e.g., 5 / 2 = 6 mod 7). Division modulo a prime is not trivial to calculate – you need to use the extended Euclidean algorithm – but it is pretty fast.

But, modular arithmetic forms a finite field only if the modulus is prime. If the modulus is composite, we have a finite ring, but not a finite field, which is not good enough for our equations to work.

Furthermore, in computing we like to deal with bytes or multiples of bytes. And, the range of a byte is 256, which is definitely not a prime number. 257 is a prime number, but that still doesn’t really work for us… if the inputs are bytes, our P and Q values will sometimes come out as 256, which doesn’t fit into a byte.

Finally, Galois Fields

The finite fields we described so far are all of size M, where M is a prime number. It is possible to construct finite fields of size Mk, where M is a prime number and k is a positive integer.

The solution is called a Galois field. The particular Galois field of interest is GF(28) where:

- Addition is XOR.

- Subtraction is same as addition; also XOR.

- Multiplication can be implemented as gf_ilog[gf_log[a] + gf_log[b]], where gf_log is a logarithm in the Galois field, and gf_ilog is its inverse. gf_log and gf_ilog can be tables computed ahead of time.

- Division can then calculated as gf_ilog[gf_log[a] – gf_log[b]], so we can reuse the same tables used by multiplication.

The actual calculation of the logarithm and inverse logarithm tables is beyond the scope of the article, but if you would like to learn more about the details ,there are plenty of in-depth articles on Galois fields online.

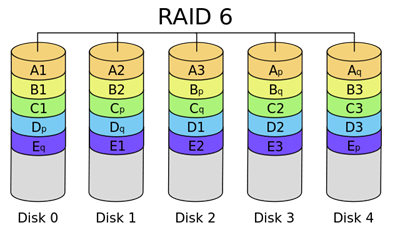

In the end, a GF(28) field gives us exactly what we wanted. It is a field of size 28, and it gives us efficient +, –, ×, / operators that we can use to calculate P and Q, and if needed recalculate a lost data block from the remaining data blocks and parities. And, each of P and Q perfectly fits into a byte. The resulting coding is called Reed-Solomon error correction, and that’s the method used by RAID 6 configuration:

Final Comments

The article explains the overall approach to implementing a RAID-6 storage scheme based on GF(28). It is possible to use other Galois fields, such as the 16-bit GF(216), but the 8-bit field is most often used in practice.

Also, it is possible to calculate additional parities to protect against additional device failures:

R = 12a + 22B + 32C + 42D + 52E

S = 13a + 23B + 33C + 43D + 53E

…

Finally, the examples in this article used 5 data blocks per stripe, but that’s an arbitrary choice – any number works, as far as the parity calculation is concerned.

Related Reading

The mathematics of RAID-6, H. Peter Anvin.

I guess my monthly checkup of this blog finally paid off.

It is great to have you back. I noticed that you no longer work for Microsoft, but for a start-up. That probably explains the lack of blog posts recently.

Although I already knew how raid-6 dual parity worked, this was still an enjoyable read. I hope there will be more blog posts of you in the (near) future.

[…] How RAID-6 dual parity calculation works – […]

5 / 2 = 6 mod 7, no? You have “3” instead above.

@Nathan: You are correct. Thanks!

@Grayskin: Thanks, I much appreciate your comments! I am hoping to find the time to write more. I know that some bloggers can generate posts with very little effort. For me, thinking through a post, working through the details and writing it up often takes more than a weekend, so it can be hard to find the time. But, I’m hoping to be able to find more time to do it in the upcoming months.

Be mindful of hard errors which are the reason that RAID no longer lives up to its original promise.

If one of the drives fails, then during the rebuild if you get an error – the entire array will die.

http://www.zdnet.com/article/w.....g-in-2009/

SATA drives are commonly specified with an unrecoverable read error rate (URE) of 10^14. Which means that once every 12.5 terabytes, the disk will not be able to read a sector back to you.

http://www.lucidti.com/zfs-che.....as-storage

Very nicely written article. I might have to hire you to write content for my data recovery blog. I had written an article here: https://www.data-medics.com/data-recovery-blog/what-is-a-parity/

Where I tried to explain RAID 5 parity in as simple a way as possible. But, seems there’s no simple explanation for RAID 6 without getting into a lot of complex math.

Given your programming, math, and RAID knowledge perhaps you’d be able to help with a project I’ve been working on. I’m trying to figure out a way to separate sets of HEX values pulled from RAID 50 sets into the two sets that all XOR out to each other. I’ve been able to do it just using brute force calculation methods, but I’ve often wondered if there was an easier way to separate the two sets.

Sorry I didnt get how 5 / 2 = 6 mod 7 (in finite field), does someone mind explaining ?

(5 / 2 = 6 mod 7) because (5 = 6 * 2 mod 7).

Newbie Question, Am kinda wondering, why we need an another parity `Q` for RAID-6, I hope RAID-6 will actually requires one more drive(when compare to RAID-5) and we’ve already generated a parity `P`, so why we can’t save the same parity `p` in the fourth drive? shouldn’t it handle two drive failure?

d1—–d2—–d3——d4

1——2——p12—–p12

3——p34—-p34—–4

p56—-p56—-5 ——6

@Bloke: Look at what you’ve drawn and imagine losing drives 1&2 or 3&4. If you lose 1&2 then the first stripe will only have the two parity records p12, not enough info to recreate blocks 1 & 2. If you lose 3&4 then the third stripe will only have p56 and you can’t recreate blocks 5 or 6. It only works if at least one of the blocks you lose is a parity block. If you consider this in a linear algebra sense then any combination that relies on using the two (identical) parity blocks will not be invertible because those two rows are linearly dependent.

You shouldn’t use “byte” if you specifically mean 8 bits. People do that all the time and it’s annoying. If you specifically mean an 8-bit quantity, it’s called an “octet.”